Almost every design team I've spoken with lately is experimenting with AI agents.

Many teams are also confused about agents and unsure where to start. Most want to know where agents actually help—and where they don't.

This article pinpoints three design workflows where agents deliver real value today, plus a quick guide to picking the right type of agent and a few places to avoid them.

In this article, you'll learn:

- What agents are and how they fit into the design workflow

- When continuous research synthesis agents save 15+ hours/week

- How UX writing agents handle 100+ microcopy variations at scale

- Where QA agents catch design system violations before review

- Which agent platforms to actually use (and which to ignore)

- The workflow types where agents are still less useful

1) Design workflows where agents actually deliver

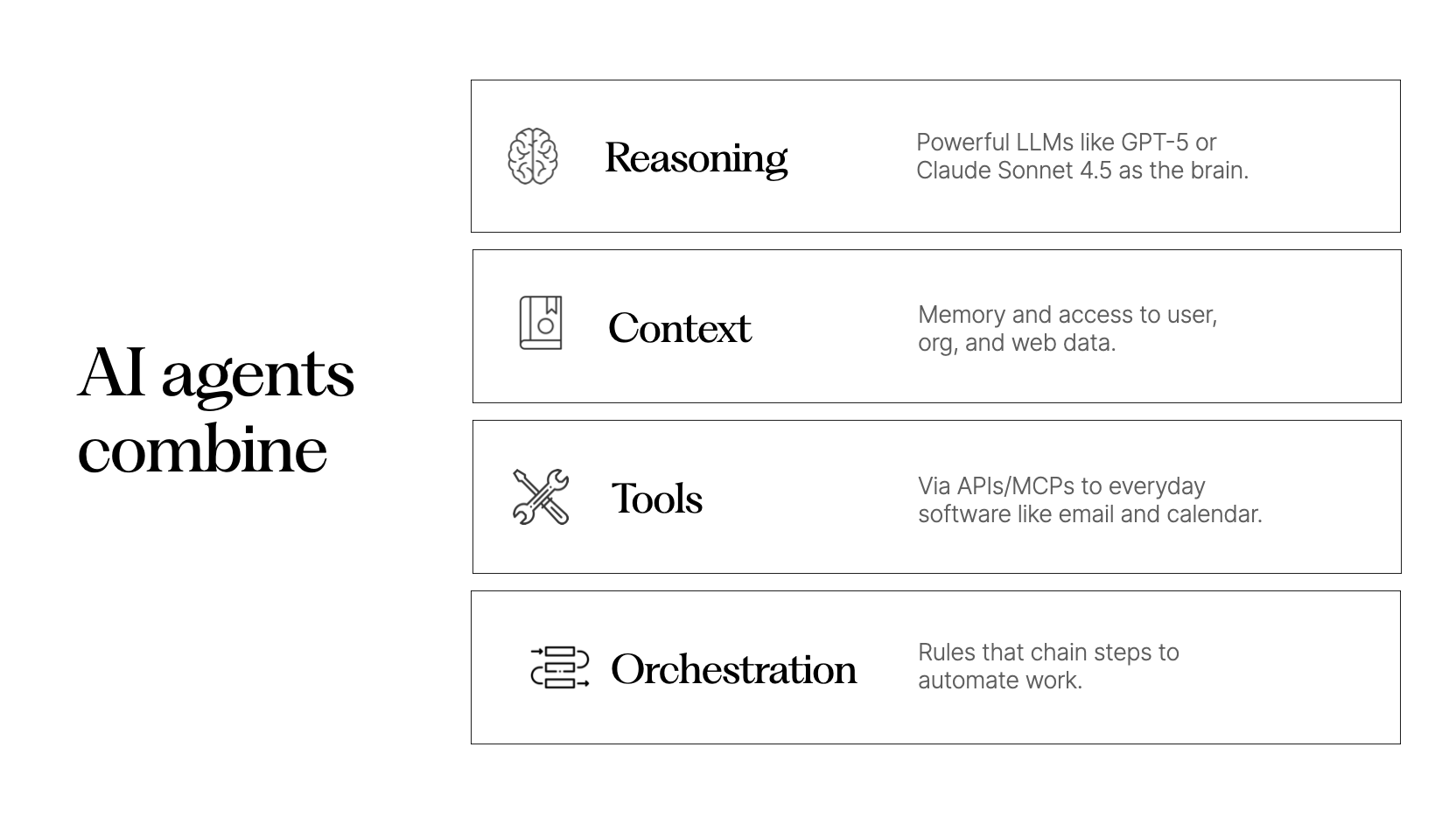

AI agents are a specific tool in the design process. They accomplish multi-step goals for users autonomously or semiautonomously (often with a human-approval step) by combining:

- Reasoning with modern LLMs (e.g., GPT-5, Claude Sonnet 4.5) as the brain.

- Context from your sources (docs, databases) plus memory of prior interactions.

- Tool use via APIs/connectors to everyday software (Figma, Slack, Calendar, Gmail, Google Docs, Shopify); MCP is an open protocol many teams use to standardize these connections.

- Orchestration—simple rules/flows (and optional triggers/schedules) that chain steps to automate work.
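To make the four ingredients concrete, here is a minimal sketch of how they might fit together. Everything in it is hypothetical: the `Agent` class, `fake_llm`, and `fake_slack` are illustrative stand-ins, not the API of any real agent platform.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical container for the four ingredients listed above."""
    llm: Callable[[str], str]                # reasoning: any function prompt -> text
    context: list[str]                       # context: snippets from docs/databases
    tools: dict[str, Callable[[str], str]]   # tool use: name -> connector function
    memory: list[str] = field(default_factory=list)  # memory of prior runs

    def run(self, goal: str, tool: str, approve: Callable[[str], bool]) -> str:
        # Orchestration: a fixed chain of steps (prompt, draft, approval, tool call).
        prompt = "\n".join([f"Goal: {goal}", *self.context, *self.memory])
        draft = self.llm(prompt)
        self.memory.append(draft)
        if not approve(draft):               # human-approval step
            return "rejected"
        return self.tools[tool](draft)


# Stubs stand in for a real model and a real Slack connector.
def fake_llm(prompt: str) -> str:
    return f"summary of {len(prompt.splitlines())} lines"


def fake_slack(message: str) -> str:
    return f"posted: {message}"


agent = Agent(llm=fake_llm, context=["Style guide: be concise."], tools={"slack": fake_slack})
result = agent.run("Summarize feedback", tool="slack", approve=lambda draft: True)
print(result)
```

In a real setup, the platform supplies the model call and the connectors; the part you design is mostly the context, the steps, and where the approval sits.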

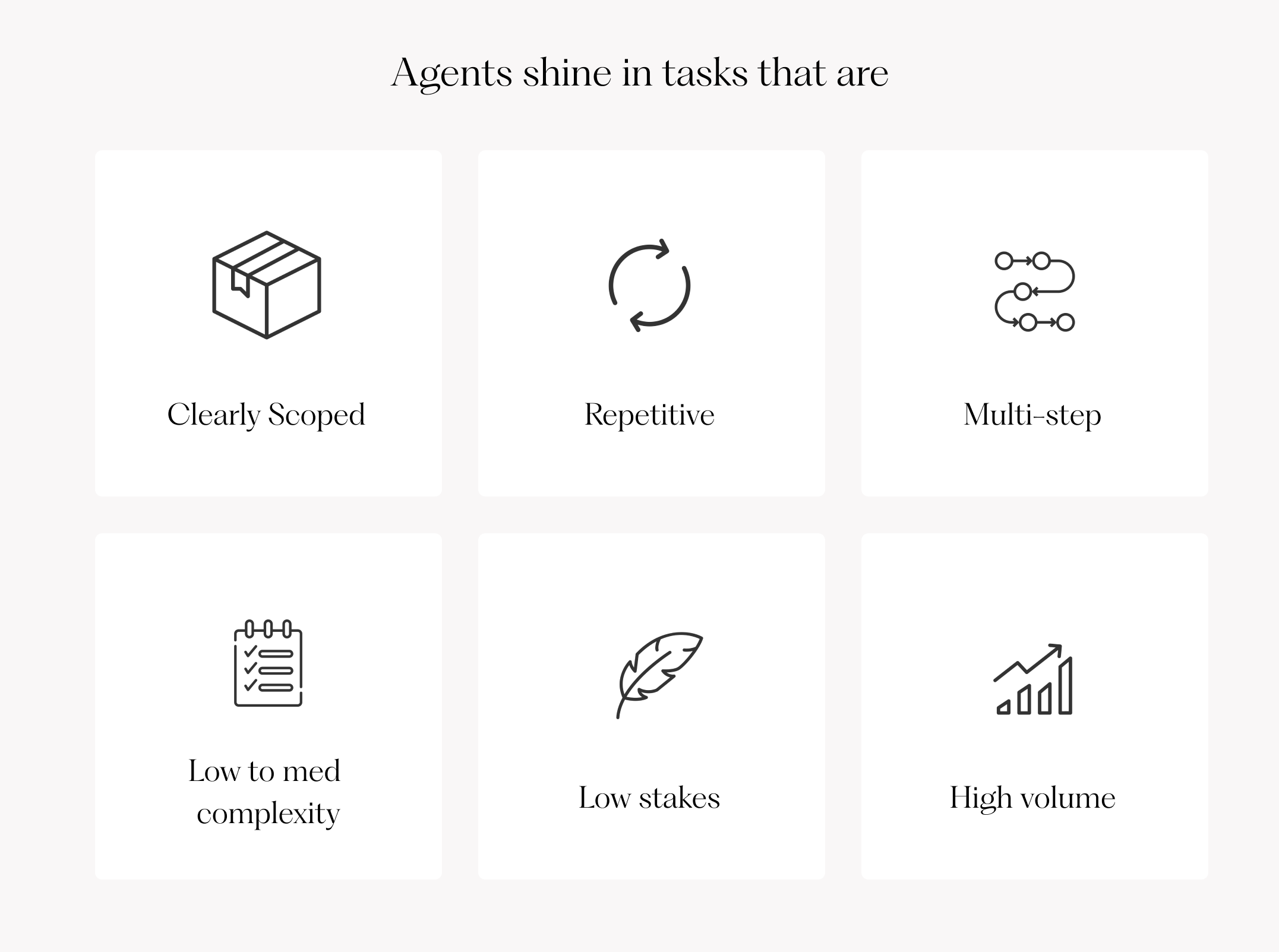

Given these characteristics, agents shine when the work is clearly scoped, repetitive, multi-step, low to medium in complexity, low-stakes, and high-volume.

Let's examine three types of workflows in which agents can deliver value for design teams.

1) Continuous research synthesis

If you're getting hundreds or thousands of customer inputs weekly (tickets, reviews, interviews), that's more than humans can scan, and every missed insight has a cost.

In these scenarios, a research agent can autonomously generate continuous customer insights in the background.

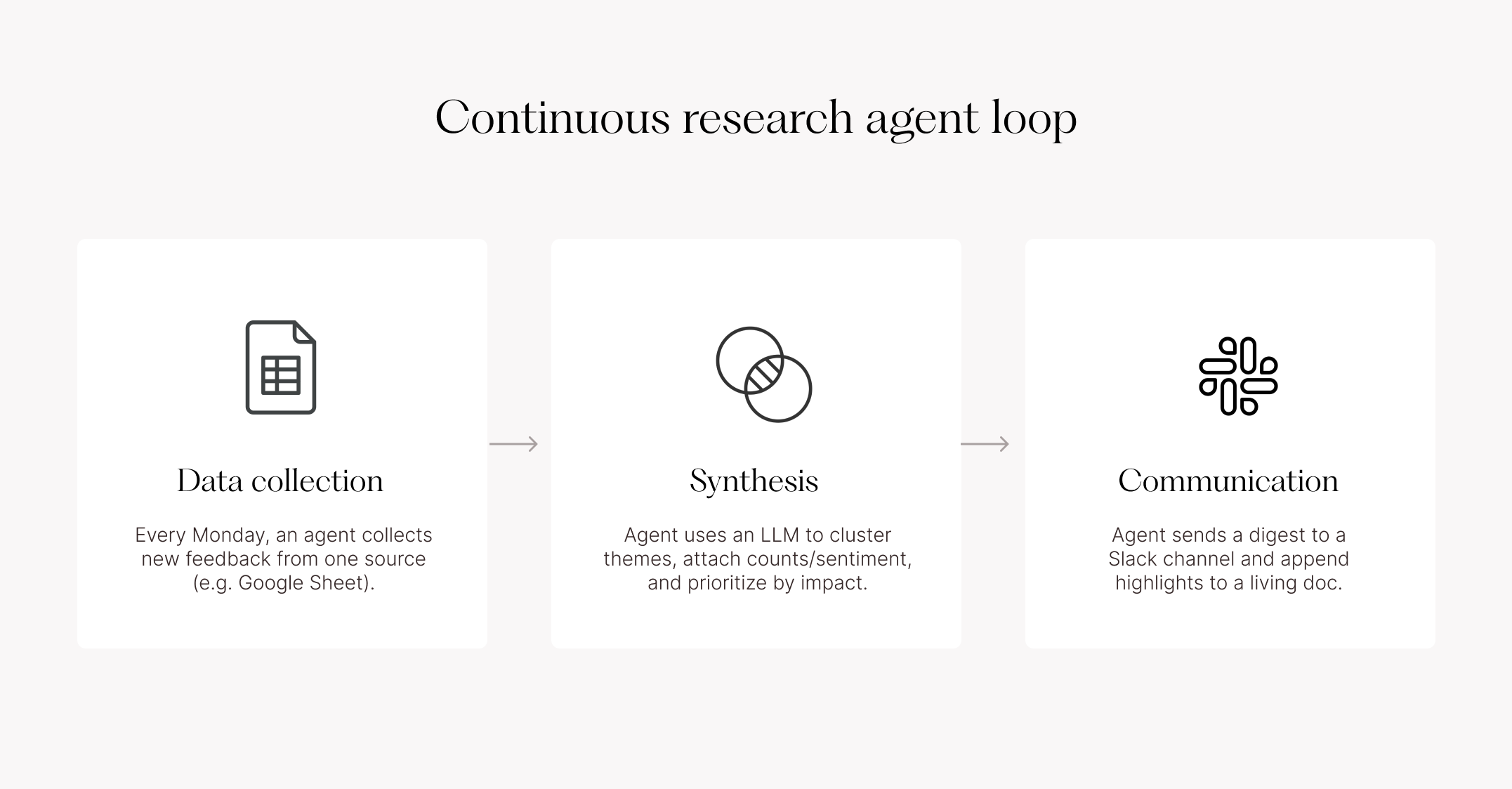

Research agent loop example:

- Every Monday at 10:00, an agent collects new feedback from one source (e.g., App Store reviews or a Google Sheet).

- Agent uses an LLM to cluster themes, attach counts/sentiment, and prioritize by impact.

- Agent sends a 1-page digest to the product Slack channel and appends highlights to a living insights doc.
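The prioritize-and-digest step of this loop can be sketched in a few lines. This is an illustration under assumptions: the `Theme` structure, the `impact` heuristic (frequent and negative themes rank first), and the sample themes are all made up, and the clustering itself would come from the LLM step.

```python
from dataclasses import dataclass


@dataclass
class Theme:
    name: str
    count: int        # number of feedback items in the cluster
    sentiment: float  # -1.0 (negative) to 1.0 (positive)


def impact(theme: Theme) -> float:
    # Assumed heuristic: frequent, negative themes matter most.
    return theme.count * (1.0 - theme.sentiment)


def build_digest(themes: list[Theme], top_n: int = 3) -> str:
    # Rank themes by impact and keep only the top few for a short digest.
    ranked = sorted(themes, key=impact, reverse=True)[:top_n]
    lines = ["Weekly feedback digest"]
    for position, theme in enumerate(ranked, start=1):
        lines.append(
            f"{position}. {theme.name} "
            f"({theme.count} mentions, sentiment {theme.sentiment:+.1f})"
        )
    return "\n".join(lines)


# Made-up example clusters, as an LLM step might return them.
themes = [
    Theme("Login errors", 42, -0.8),
    Theme("Dark mode praise", 30, 0.9),
    Theme("Slow export", 18, -0.5),
]
digest = build_digest(themes, top_n=2)
print(digest)
```

Keeping the ranking rule outside the LLM makes the digest easier to spot-check, since you can see exactly why a theme landed at the top.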

I’ve built this type of agent for my business using Lindy AI: it scans new client feedback automatically from a Google Sheet, messages me with insights on Slack, and updates a living doc.

Why this works: Agents are excellent at pattern-matching across many data sources. Start with one source and expand once the weekly digest proves helpful. Humans should always spot-check the insights—AIs can overgeneralize.

The same agent structure can also be used to scan industry trends from several newsletters, blogs, or podcasts.

2) High-volume UX writing

Microcopy quality drives experience, and scale drives pain. Agents help when you need many consistent strings reviewed against tone and constraints.

For example, I have a client in the finance industry experimenting with AI agents to write understandable microcopy for complex personal finance topics in 8 languages.

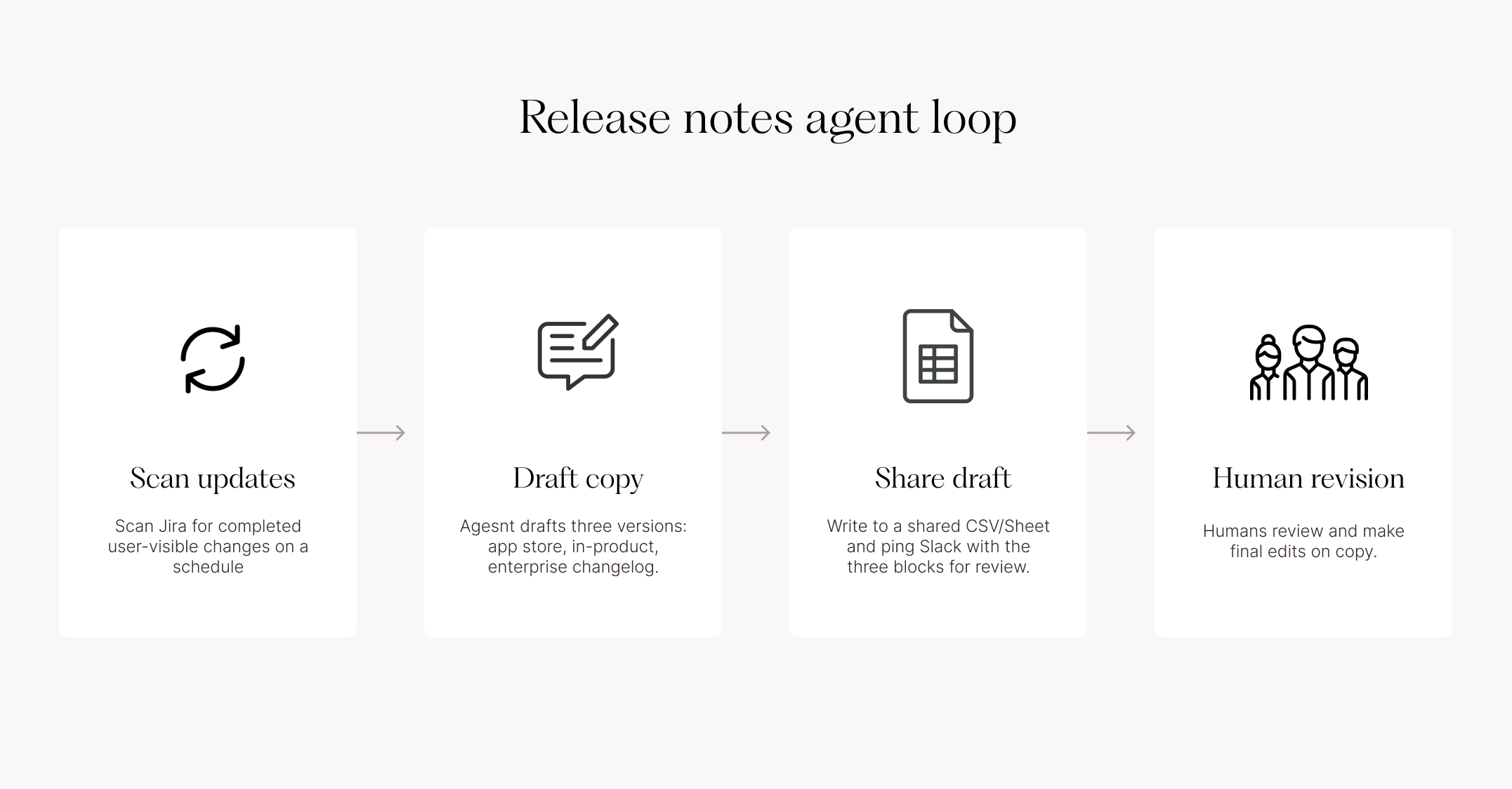

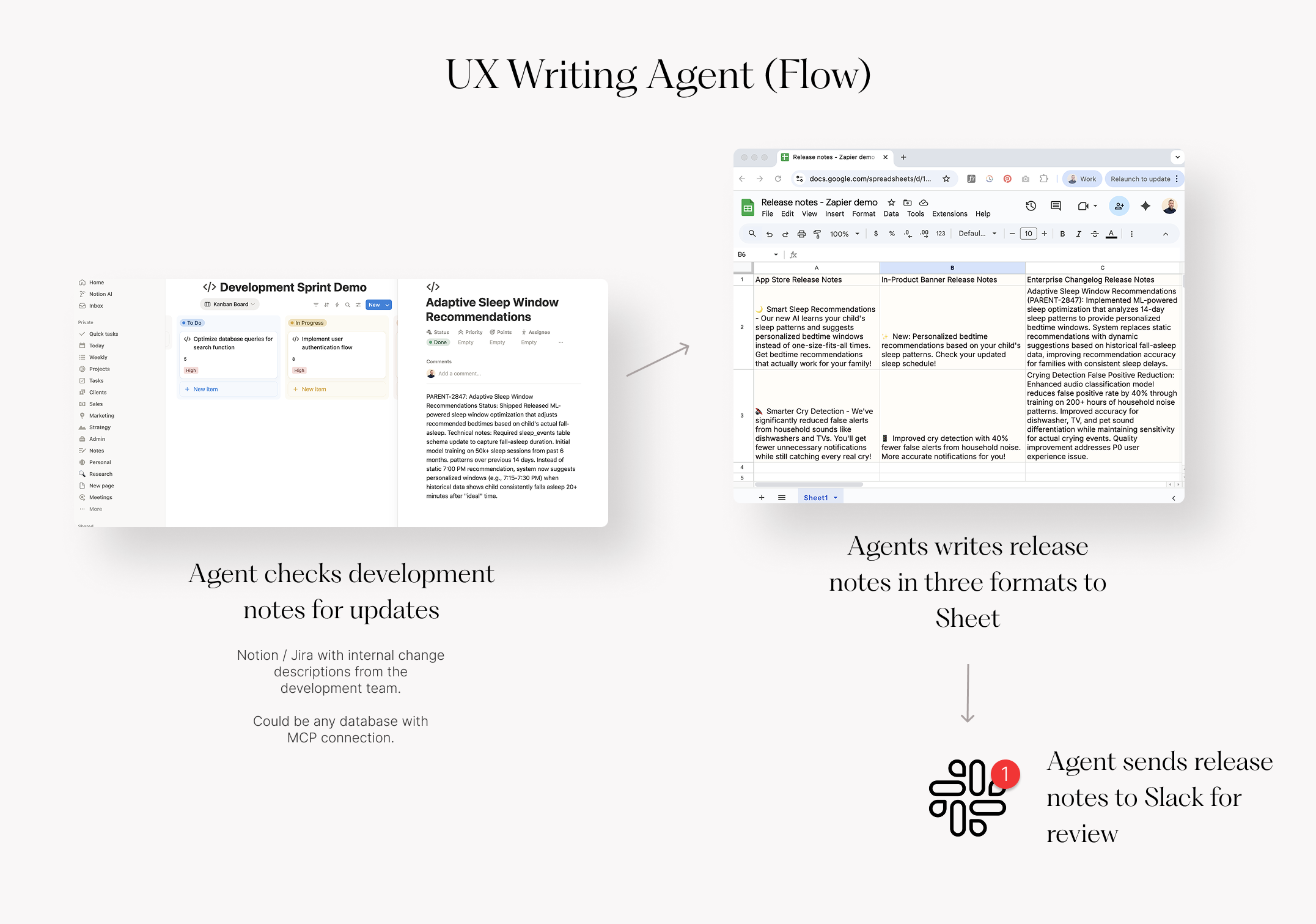

One area of continuous, high-volume writing is product release notes. Here’s an example of an agent loop that automatically writes them.

Release notes agent (example):

- Scan Jira/Notion daily or weekly for user-visible changes the development team has made.

- Draft three versions of release notes based on the changes (app store, in-product banner, enterprise changelog), grounded in your brand style and copy specs.

- Write to a shared CSV/Sheet and ping Slack with the three blocks for review.

- Humans make final edits.
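As a rough sketch of the drafting and hand-off steps above, the snippet below builds one prompt per channel and writes the drafts to a CSV with a review status. The channel briefs, function names, and sample changes are assumptions for illustration; the actual LLM call is replaced by a placeholder.

```python
import csv
import io

# Hypothetical briefs for the three release-note formats.
CHANNELS = {
    "app_store": "Short, friendly summary for the app store listing.",
    "in_product_banner": "One sentence for an in-product banner.",
    "enterprise_changelog": "Detailed, neutral entry for the enterprise changelog.",
}


def build_prompts(changes: list[str], style_guide: str) -> dict[str, str]:
    # One prompt per channel, each grounded in the same style guide and changes.
    change_list = "\n".join(f"- {change}" for change in changes)
    return {
        channel: f"{brief}\nStyle guide: {style_guide}\nChanges:\n{change_list}"
        for channel, brief in CHANNELS.items()
    }


def to_review_csv(drafts: dict[str, str]) -> str:
    # Every draft starts as "needs_review" so nothing ships without a human.
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["channel", "draft", "status"])
    for channel, draft in drafts.items():
        writer.writerow([channel, draft, "needs_review"])
    return buffer.getvalue()


prompts = build_prompts(["Added PDF export", "Fixed login timeout"], "Plain language")
# Placeholder: a real agent would send each prompt to an LLM here.
drafts = {channel: f"[draft for {channel}]" for channel in prompts}
sheet = to_review_csv(drafts)
print(sheet)
```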

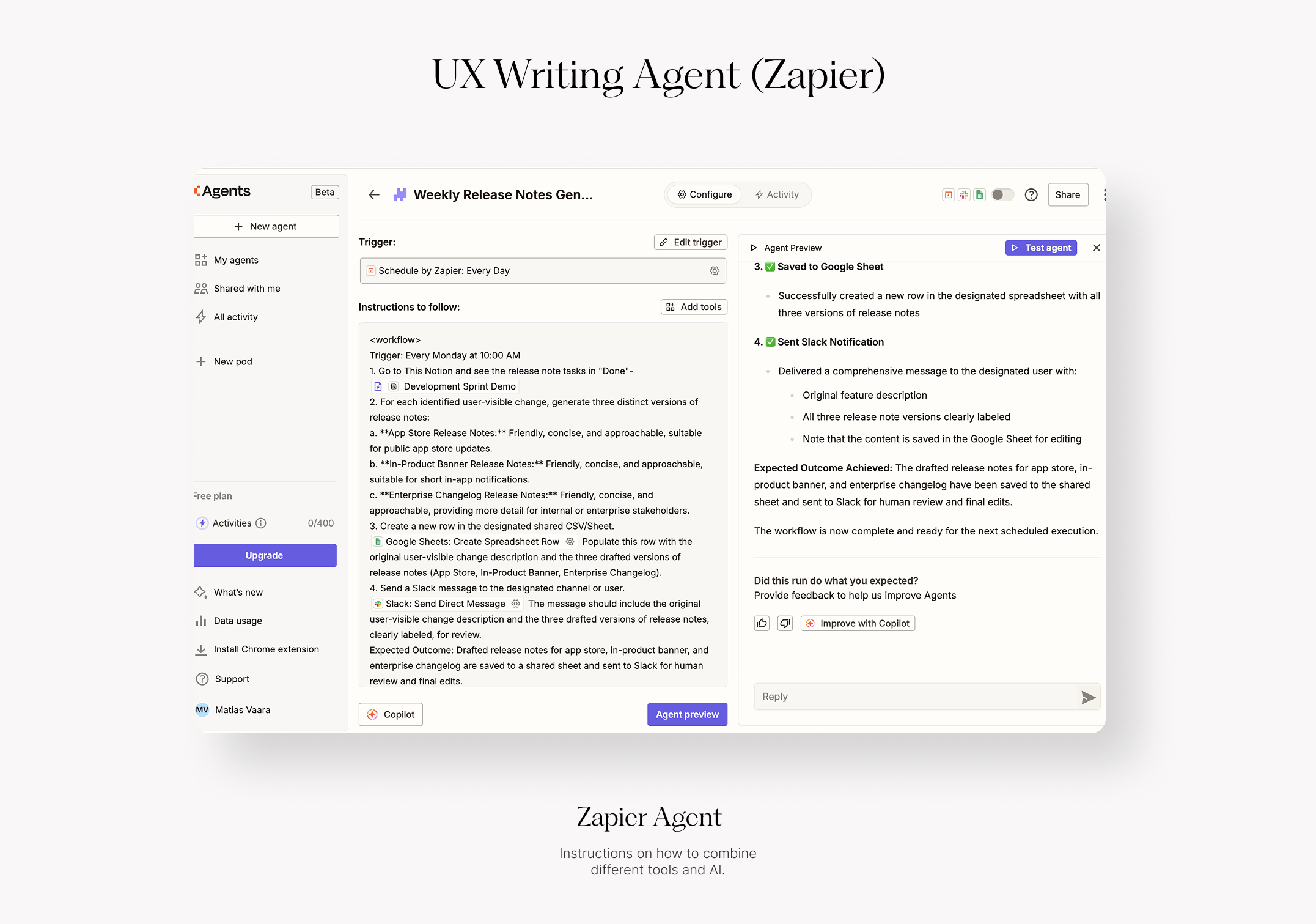

I built a shareable demo version of this flow using Zapier Agents, Notion, Google Sheets, and Slack. See screenshots below for a concrete example of how the agent loop works.

Guardrails that matter: enforce brand voice, ban/require terms, reading level ≤ 8th grade, and require approval before anything ships.
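Some of these guardrails can be checked with plain code before a human ever sees the draft. The sketch below is one possible approach, not a definitive implementation: it uses the Flesch-Kincaid grade formula with a rough vowel-group syllable count, and the function names and example strings are my own assumptions.

```python
import re


def count_syllables(word: str) -> int:
    # Rough heuristic: count groups of consecutive vowels, at least one per word.
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def reading_grade(text: str) -> float:
    # Flesch-Kincaid grade level, approximate because syllables are estimated.
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    syllables = sum(count_syllables(word) for word in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59


def check_copy(text: str, banned: list[str], required: list[str],
               max_grade: float = 8.0) -> list[str]:
    # Simple case-insensitive substring matching for banned/required terms.
    lowered = text.lower()
    issues = [f"banned term: {term}" for term in banned if term.lower() in lowered]
    issues += [f"missing required term: {term}"
               for term in required if term.lower() not in lowered]
    grade = reading_grade(text)
    if grade > max_grade:
        issues.append(f"reading grade {grade:.1f} exceeds {max_grade}")
    return issues


good = check_copy("You can now export reports as PDF.",
                  banned=["simply"], required=["export"])
bad = check_copy("Simply leverage our comprehensive multidimensional "
                 "reconciliation functionality.",
                 banned=["simply"], required=["export"])
print(good)
print(bad)
```

Brand voice is harder to reduce to rules, so that check usually stays with the LLM and the human approver.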

3) Automatic quality assurance

QA is repetitive, rule-based, and benefits from extra eyes. Think of an agent as a sous-chef—it inspects everything leaving the kitchen, with humans as the ultimate quality control.

What it can check:

- Consistency: tokens/styles applied, spacing on the 8-pt grid, corner radius, deprecated components.

- Accessibility: contrast (≥ 4.5:1 for text), tap target sizes, keyboard focus order, "no color-alone" cues.
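Two of these checks are simple arithmetic, so they do not need an LLM at all. Here is a small sketch of the WCAG contrast-ratio formula and an 8-pt grid check; the function names are my own, and colors are assumed to be six-digit hex strings.

```python
def relative_luminance(hex_color: str) -> float:
    # WCAG relative luminance of an sRGB color given as "#RRGGBB".
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Linearize each channel (sRGB threshold; WCAG 2.x text lists 0.03928,
    # which gives the same result for 8-bit colors).
    linear = [
        c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    red, green, blue = linear
    return 0.2126 * red + 0.7152 * green + 0.0722 * blue


def contrast_ratio(foreground: str, background: str) -> float:
    # Ratio of the lighter to the darker luminance, each offset by 0.05.
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_text_contrast(foreground: str, background: str) -> bool:
    # 4.5:1 is the threshold for normal-size text mentioned above.
    return contrast_ratio(foreground, background) >= 4.5


def on_grid(value: int, base: int = 8) -> bool:
    # True when a spacing value sits on the 8-pt grid.
    return value % base == 0


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_text_contrast("#767676", "#FFFFFF"))
print(on_grid(24), on_grid(20))
```

Checks like tap target size follow the same pattern, while focus order and "no color-alone" cues need more context and are better left to the agent plus a human reviewer.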

QA Agent loop:

- Input a Figma frame or artboard (via MCP server or screenshots).

- Evaluate against your design-system rules + WCAG checklist.

- Cluster findings and propose safe fixes (e.g., swap to the correct token).

- Give structured feedback on fixes, ordered from critical to nice-to-have. The output can include hallucinations, but it is usually 80-90% on point.
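One way to keep the agent's feedback structured is to ask for findings in a fixed shape and sort them in code. The sketch below assumes a hypothetical `Finding` record and three severity labels; the sample findings are invented for illustration.

```python
from dataclasses import dataclass

# Assumed severity scale, from most to least urgent.
SEVERITY_ORDER = {"critical": 0, "major": 1, "nice-to-have": 2}


@dataclass
class Finding:
    rule: str           # which design-system or WCAG rule was violated
    layer: str          # the frame or layer the finding refers to
    severity: str       # one of the keys in SEVERITY_ORDER
    suggested_fix: str  # a safe fix, such as swapping to the correct token


def format_report(findings: list[Finding]) -> list[str]:
    # Sort from critical to nice-to-have; ties keep their original order.
    ordered = sorted(findings, key=lambda finding: SEVERITY_ORDER[finding.severity])
    return [
        f"[{finding.severity}] {finding.layer}: {finding.rule} -> {finding.suggested_fix}"
        for finding in ordered
    ]


# Invented findings, in the shape an agent could be asked to return.
findings = [
    Finding("Corner radius off-scale", "Card", "nice-to-have", "use radius-md"),
    Finding("Text contrast below 4.5:1", "Primary button", "critical", "use text-on-brand token"),
    Finding("Deprecated component", "Dropdown", "major", "swap to Select v2"),
]
report = format_report(findings)
print("\n".join(report))
```

A fixed shape also makes spot-checking for hallucinations easier, because every finding names a specific layer and rule you can verify in the file.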

As a shareable demo, I created a Cursor agent trained on the public Untitled UI design system documentation. In the screens below, you can see its comments on an example frame I gave it, which included some obvious mistakes. It caught my button contrast issue and correctly pointed out 5 other, less obvious problems. For this use case, I found GPT-5 performed the best, both in Cursor and as a custom GPT.

The Figma MCP connection is handy, but it is prone to connection issues. In these cases, a screenshot input works as a backup.

To scale this type of QA Agent beyond Cursor users, it could be wrapped in a Figma plugin or a simple(ish) web application.

2) How to build these (choose your starting point)

Now that you've seen where agents work, here's how to actually build them.

The key decision: How much control vs. speed do you need?

Quick Decision Flow

→ Need a quick, simple agent (Q&A, single-step tasks)? → Lightweight assistants

→ Ready to automate multi-step workflows? → Automation builders or Enterprise platforms

→ Rolling out org-wide? → Enterprise platforms

→ Want to build custom agents with code? → Coding agents or Developer frameworks

→ Complex requirements? → Developer frameworks

Platform Comparison

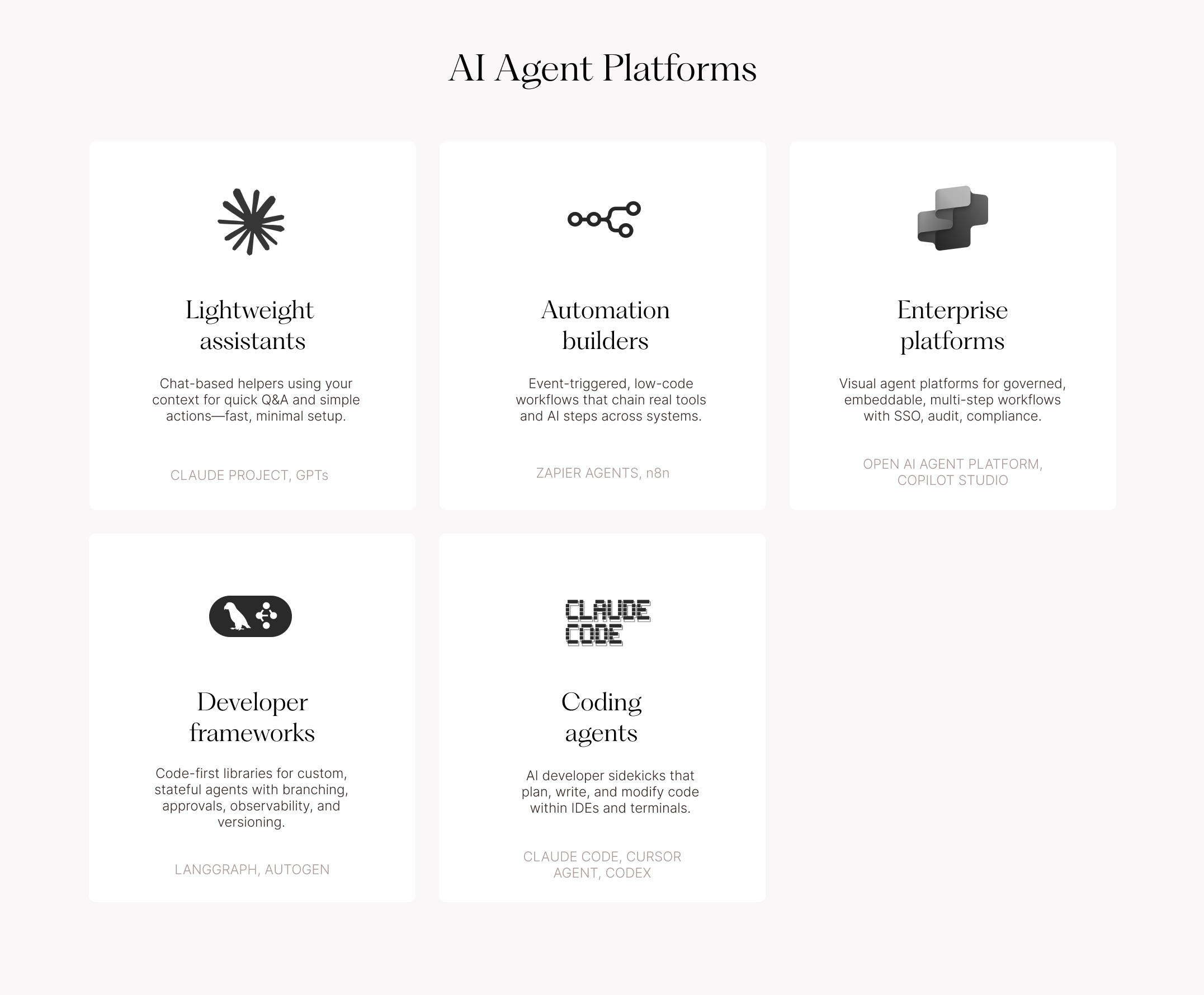

Agents can be brought to life in many different ways. Here’s a quick comparison of platforms.

1) Lightweight assistants (Claude Projects, GPTs)

- User-initiated, conversational flows ("mini-agents")

- Simple tasks and Q&A

- Simple tool connections (e.g., Google Workspace, web search, file access)

- Best for quick agents without complex orchestration

2) Automation builders (Zapier Agents, n8n, Lindy AI)

- Multi-step workflows connecting real tools (Slack/Drive/Jira)

- Triggered by events (new ticket, calendar update, etc.)

- Low/no-code visual builders

- Best for glue work between systems

3) Enterprise platforms (OpenAI Agent Platform, Copilot Studio, Vertex AI)

- Org-wide deployment with governance

- SSO, audit logs, data residency controls

- Admin oversight and usage monitoring

- Built for compliance requirements

4) Developer frameworks (LangGraph, AutoGen, Semantic Kernel)

- Custom rules and complex logic

- Full control over agent behavior

- Version control and observability

- Requires engineering resources

5) Coding agents (Claude Code, Cursor Agent, Codex)

- Design-adjacent engineering tasks

- Token transforms, doc generators, internal tools

- Lives in IDE/terminal workflow

Start simple (when you can): Most teams should begin with lightweight assistants or automation builders.

Experiment with dummy data first to prove value quickly. Once you need to handle sensitive data through multi-step automations, move to governed enterprise platforms and loop in your IT security team early.

3) When not to use agents in design

There are still many areas of design work where agents are not an ideal solution.

Agents fall short as the primary tool for high-stakes, context-heavy work requiring taste or ethical nuance. They're also a poor fit for one-off tasks that don't repeat in similar ways—the setup cost outweighs the benefit.

Examples:

- Setting product/experience vision

- Crafting brand identity

- Navigating legal/ethical decisions and sensitive comms

- Motion/visual details that require human taste

- Final sign-off on stakeholder-facing deliverables

- Unique, one-time projects with no repeating pattern

Agents can assist (summaries, drafts, checks) but humans own judgment.

For non-repetitive and freeform tasks, simply having a well-contextualized conversation with an LLM assistant is the way to go.

How to get started (next Monday)

- Pick a workflow that's repetitive, low-stakes, low/med complexity, and well-defined.

- Sketch a tiny flow using LLMs + context + tools + rules.

- Start with a lightweight assistant for one week. If you hit limits (embedding, connectors, governance, measuring quality), graduate to an enterprise agent platform.

- Build a working demo with realistic dummy data.

- Before scaling with real data, loop in engineering + legal to ensure compliance.