How can vibe coding amplify the impact of designers?

We'll explore what vibe coding actually is, then dive into three practical applications for real-world product development: exploring ideas, prototyping experiences, and collaborating with developers. We'll also lay out four principles for succeeding with vibe coding and how to get started.

Time to dust this newsletter off after a prolonged summer and client-work break and jump in.

What is vibe coding and why should designers care?

Vibe coding has been one of the buzzwords of 2025.

Andrej Karpathy - formerly of OpenAI and Tesla - coined the term after confessing on X that he'd been coding purely by speaking to AI-assisted tools like Cursor, fully "trusting the vibe."

In professional settings, this concept has evolved beyond its pure vibes origins into what's now called "Responsible AI-Assisted Development" - reframing the AI not as an infallible oracle, but as a powerful collaborator that accelerates design-to-code workflows under strict human oversight.

Two forces are driving the surge: increasingly capable agentic reasoning models like Claude 4.5 and OpenAI's GPT-5, paired with AI development tools like Cursor, Claude Code, and Lovable.

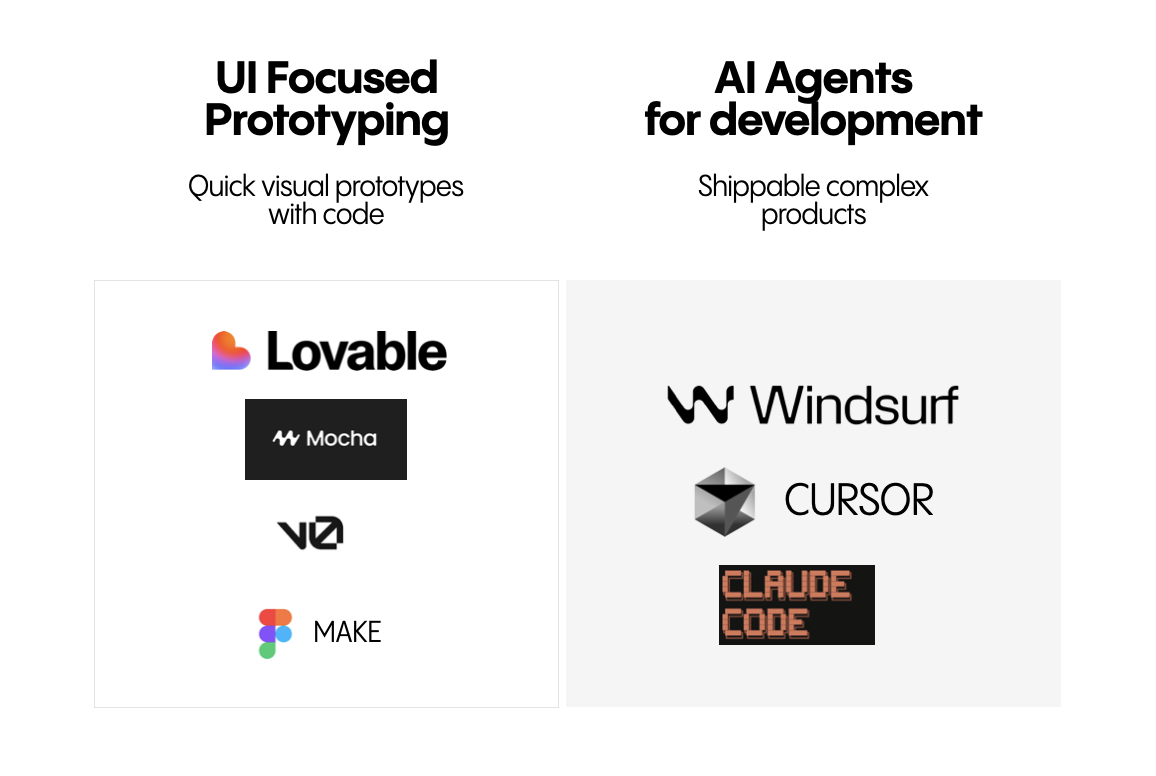

The "vibe coding" toolkit roughly divides into two camps:

Front-end focused tools like Lovable, V0 and Figma Make hide most code from users, focusing on prompting and visual front-end output. The learning curve is gentler, but they offer less control and development robustness. Notably, 63% of V0's users are non-developers, highlighting how accessible these tools have become for designers.

AI development co-pilots like Cursor, Windsurf, Codex and Claude Code target professional software developers. They work in developer environments like IDEs or terminals, are almost entirely text-based, and less inviting for non-coders. They sacrifice scaffolding for control - essential for professional software development.

Where should you start?

My recommendation for people with no coding background: start with front-end tools like V0 and Lovable. Get a few dozen practice projects under your belt, and if the coding spirit moves you, dive into the deeper end with Cursor, Codex, or Claude Code.

When should designers use vibe coding?

I've experimented with around 30 vibe coding projects and run several AI training sessions with design teams.

Based on my experimentation and workshop discussions, I see three realistic areas of value:

- Exploring early ideas

- Prototyping advanced interactions

- Collaborating with developers

1. Exploring early ideas

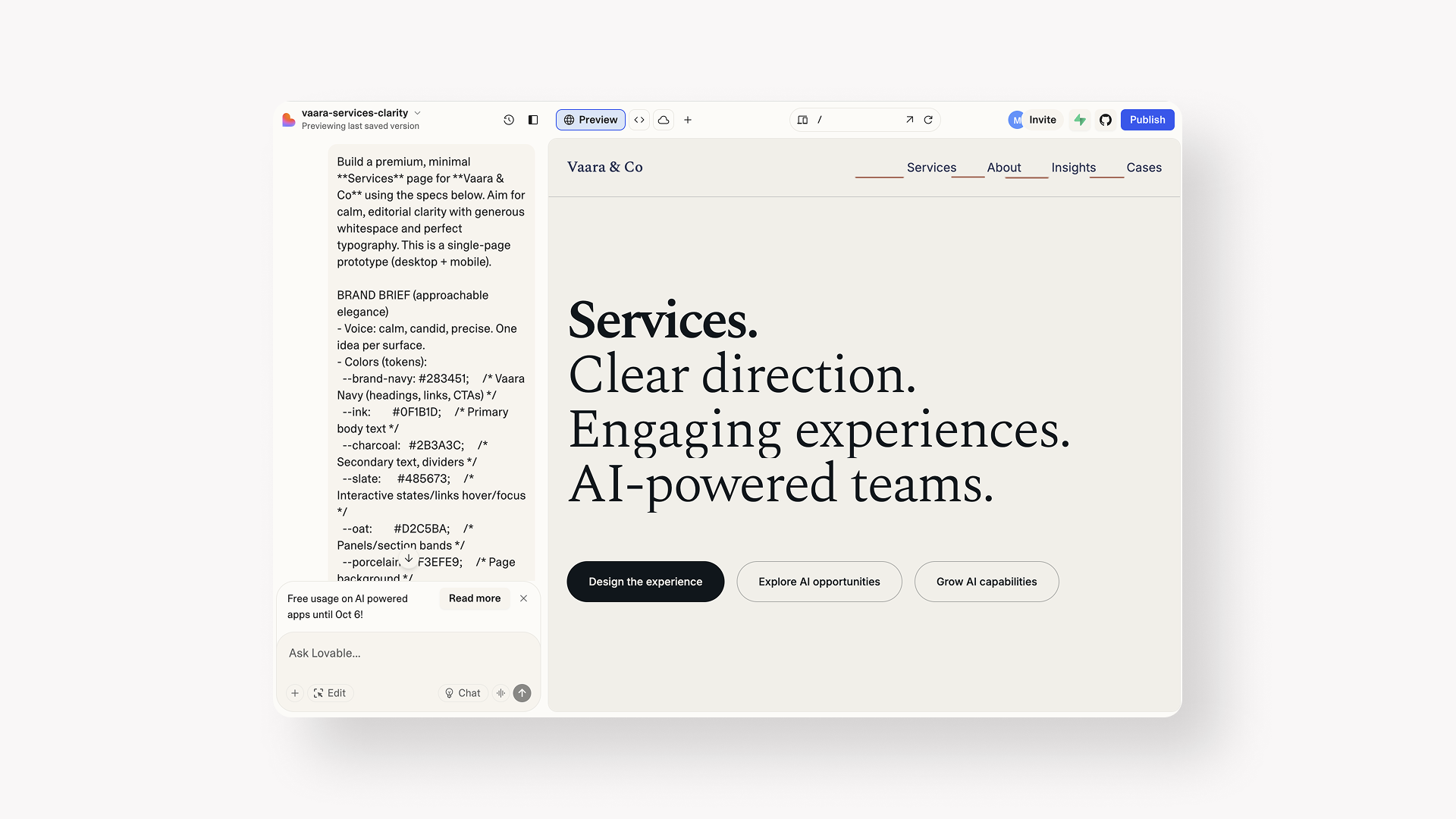

I've been updating my website lately, specifically my services section structure.

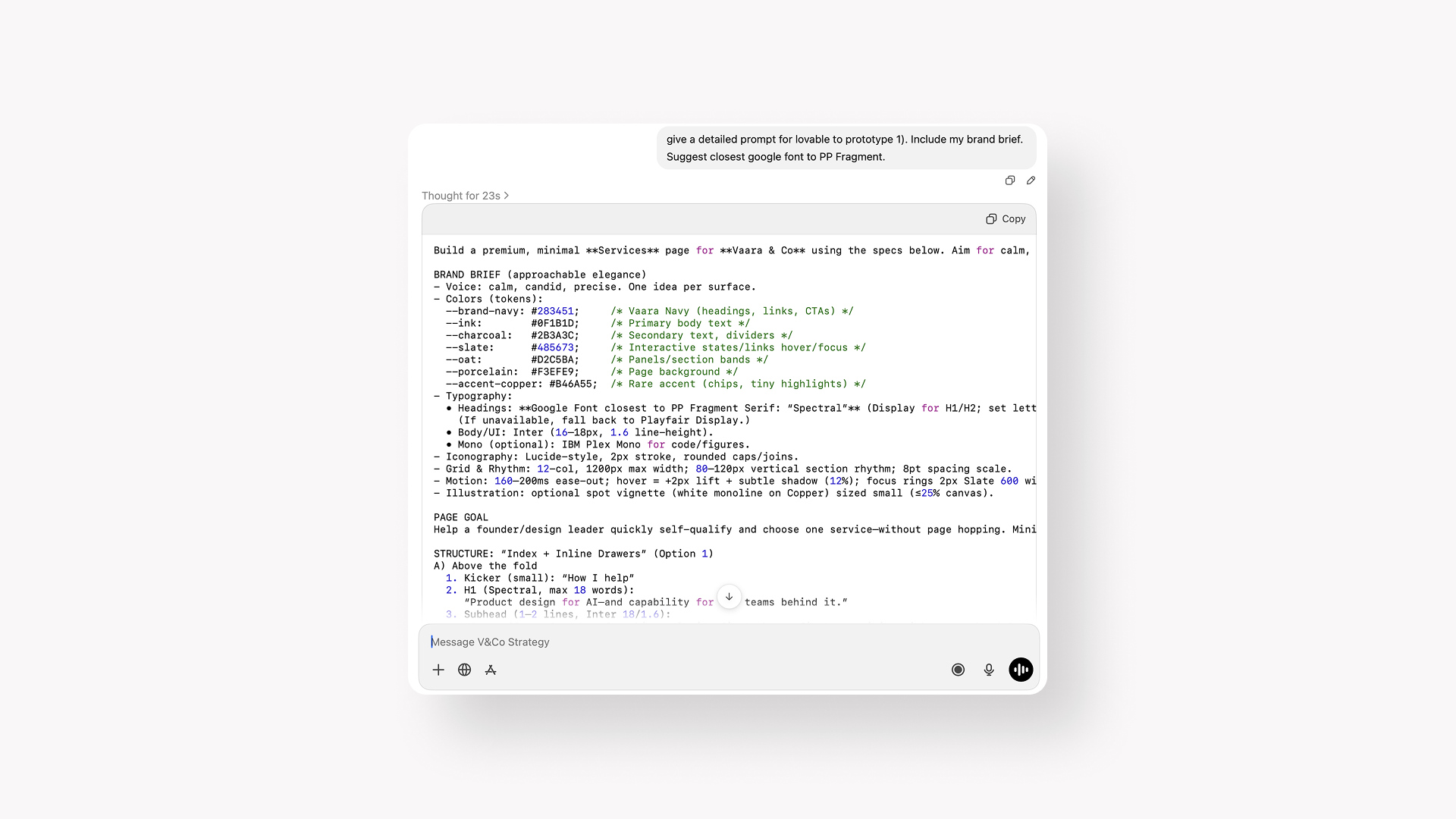

I asked GPT-5 for 5 structure options. With tons of business context already in the conversation, I wanted quick interactive prototypes of the most promising directions. So I asked GPT-5 to create a detailed prompt for Lovable to develop the page, including my company's brand tokens like colors and fonts.

In minutes, I had a working front-end prototype. It wasn't perfect in design or code, but it gave me a realistic sense of how this version would feel to users.

By combining personal LLM assistants loaded with business context and vibe coding tools, we can spin up a dozen experimental prototypes in an hour.

They won't be close to shippable, but they let us explore early ideas realistically - by ourselves or with stakeholders. To speed up the process further, we can build a rough design system with ready-to-use UI components.

Just 6 months ago, we discussed using AI design tools like Uizard or Stitch to quickly explore UX ideas. Now, prompt-to-code tools like Lovable are becoming the most powerful option for early exploration.

This acceleration fundamentally changes the economics of experimentation - we can now validate far more ideas before significant engineering investment, leading to more robust and user-vetted solutions.

2. Prototyping advanced interactions

Most designers communicate UX ideas in still images in Figma, stitched together to form clickable prototypes.

Modern software experiences demand more: motion, sound, camera use, haptic feedback, real data - not to mention deep AI integration. Vibe coding bridges this gap.

As an experiment, I wanted to see how quickly I could create a prototype of an extremely simple kids' game using video, sound, and haptic feedback. A game built completely around sound and haptic mobile feedback would be impossible in Figma.

In a few hours with Cursor, I had a playable mini-demo for my 4-year-old user tester. The first version of the dinosaur counting game was delightful, with Finnish voice-over and satisfying haptics.
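To give a sense of what the haptic part of a prototype like this can look like in code, here is a minimal TypeScript sketch. It assumes a web-based prototype that uses the browser Vibration API (`navigator.vibrate`), which is not available in every browser. The event names and pattern values are made up for illustration and are not taken from the actual game.

```typescript
// Hypothetical game events; the names are illustrative only.
type GameEvent = "tap" | "correct" | "wrong";

// Vibration patterns in milliseconds: vibrate, pause, vibrate, ...
const HAPTIC_PATTERNS: Record<GameEvent, number[]> = {
  tap: [20],
  correct: [40, 60, 40],
  wrong: [200],
};

// Minimal shape of the part of `navigator` this sketch relies on, so the
// function can also be tried outside a browser with a stand-in object.
interface VibrationCapable {
  vibrate?: (pattern: number[]) => boolean;
}

// Plays the pattern for an event. Returns false when the device or
// browser has no vibration support, so the caller can fall back to sound.
function playHaptic(event: GameEvent, device: VibrationCapable): boolean {
  if (typeof device.vibrate !== "function") {
    return false;
  }
  return device.vibrate(HAPTIC_PATTERNS[event]);
}
```

In a browser you would call `playHaptic("correct", navigator)`. Keeping the patterns in one table makes "make the correct-answer buzz shorter" a one-line request to the AI tool.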

The same holds for prototyping with real data and AI models. I built a handful of mini-experiments using the Claude API, including WanderWise - an app that helps users plan their ideal holiday with AI itinerary building.
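For readers curious what "using the Claude API" means in practice, here is a rough TypeScript sketch of the kind of request an itinerary prototype might send to Anthropic's Messages API. It only builds the request and does not send it. The function name, prompt wording, and `max_tokens` value are my own assumptions for illustration, not WanderWise's actual code, and the model ID is left as a parameter because model names change over time.

```typescript
// Plain description of an HTTP request to the Messages API.
interface MessagesRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds (but does not send) a request asking for a day-by-day itinerary.
// `buildItineraryRequest` is a hypothetical helper, not part of any SDK.
function buildItineraryRequest(
  apiKey: string,
  model: string,
  destination: string,
  days: number,
): MessagesRequest {
  return {
    url: "https://api.anthropic.com/v1/messages",
    method: "POST",
    headers: {
      "content-type": "application/json",
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
    },
    body: JSON.stringify({
      model,
      max_tokens: 1024, // illustrative cap on the reply length
      system: "You are a travel planner. Reply with a short day-by-day itinerary.",
      messages: [
        { role: "user", content: `Plan ${days} days in ${destination}.` },
      ],
    }),
  };
}
```

The result can be handed to `fetch` from a small server-side script. Calling the API straight from browser code would expose the API key, which is fine for a private experiment on your own machine but not for anything you share.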

Conversational and agentic user experiences only become real with actual data.

As non-developers become comfortable with vibe coding, their intuition for designing complex experiences widens. The feedback loop tightens - experiencing how a complex animation, haptic feedback, or AI integration actually works makes you far better equipped to iterate on it.

3. Collaborating with developers

Comfort with vibe coding tools can remove boundaries between developers, designers, and product leaders.

Designers can sharpen their ability to describe motion using code rather than vague hand-waving or Figma hacks. Emerging capabilities like the Figma MCP server are further bridging the worlds of design and code. As non-coders build mini-experiments with AI model APIs, they develop a more concrete sense of what experiences are possible with deep AI integrations.

"The boundaries between developers and designers are becoming more fluid" says Anthropic design lead Meaghan Choi. "The code is the ultimate source of truth (…) the more we can (as designers) experiment in real code, the better". At Anthropic, everyone from designers to product leaders experiments with the real code base using Claude Code. Engineers still own code review and production releases.

At Notion, designers often start by asking the AI to analyze or explain the existing state before making changes. When adding a feature to a complex app, Notion's team first asked Claude "Why is this implementation the way it is?" The AI dug through code and summarized the status quo, which then informed the precise instruction for the change. This two-step dialogue (investigate, then implement) helps the AI ground its response in real context, reducing wild guesses.

The results speak for themselves: AI assistants now write an estimated 30-40% of Notion's code. In one case, an engineer prompted the AI to "Add full-screen zoom to mermaid diagram charts" and after just 30 minutes of human-led refinement, the feature was complete - a task that would have previously taken days or weeks.

Vibe coding tools are becoming a shared platform for designers and developers to shape live products.

How should you use vibe coding?

Four key principles for getting the most out of vibe coding tools: starting with real context, creating a detailed plan, iterating piece by piece, and adding your own thinking and polish.

1. Start with real context

The more you provide the model with your real business context, the more relevant results it'll produce. Useful context includes:

- Real customer insights

- Business challenges

- Design system (as screenshots, JSON files, or direct from Figma with MCP)

- Reference screenshots of relevant UIs

- Your own design mockups from Figma

For a light design system, ask a model like GPT-5 to create a written description based on screenshots - covering both high-level UX patterns and token-level details like button styles, border radius and typography.

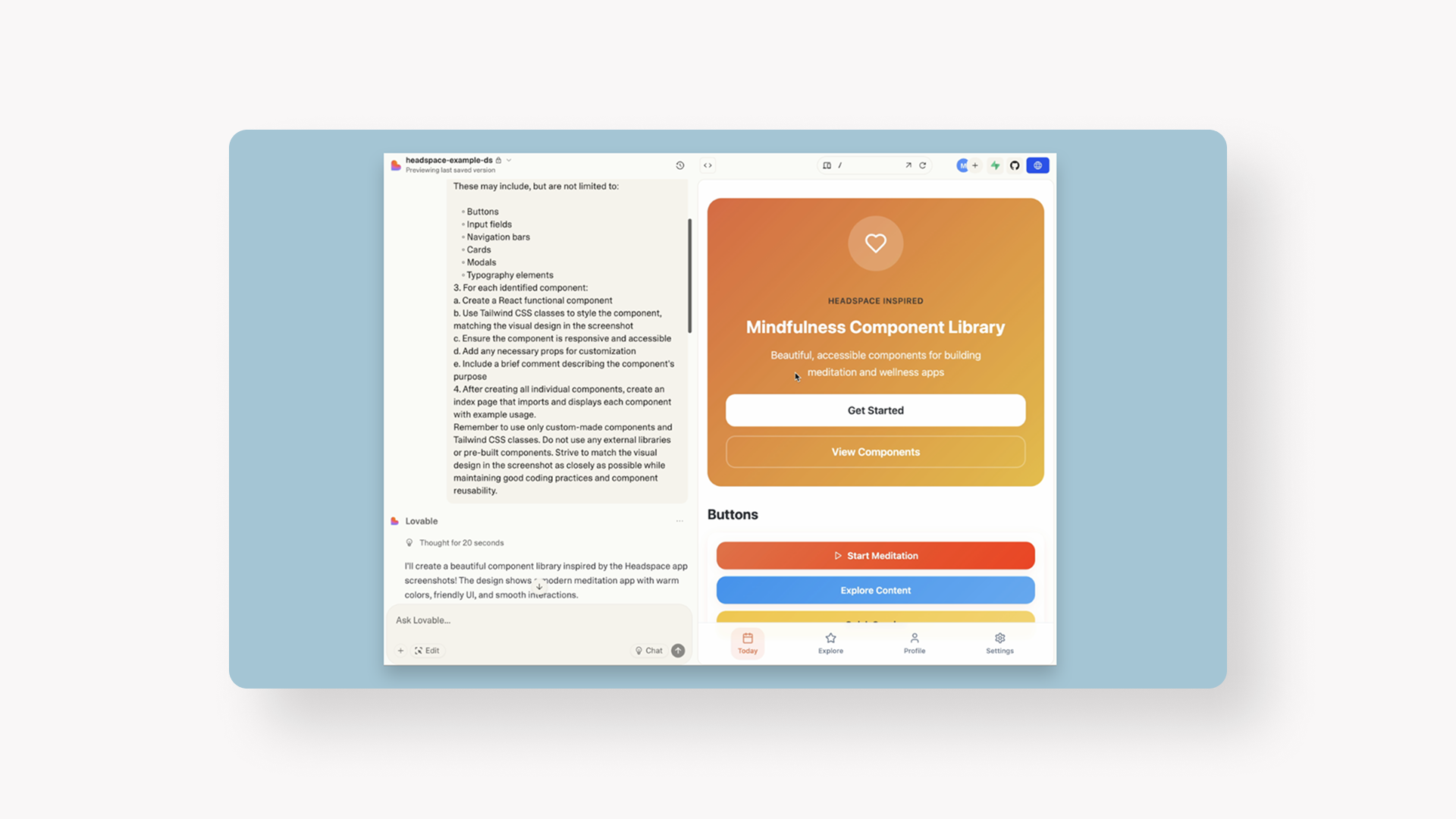

For a more comprehensive component library, ask Lovable or Cursor to create an HTML page with real components for your brand based on screenshots. This component library acts as a foundation for future sessions. As an experiment, I created a Headspace themed component library with Lovable based on screenshots of the actual Headspace product.

A common best practice is to create a manifest file (like CLAUDE.md or Lovable instructions) that lists your design tokens, coding standards, and project conventions for the AI to reference consistently across sessions.
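As a rough illustration of the manifest idea, here is a small TypeScript sketch that keeps design tokens in one typed object and renders them as a text section you could paste into a file like CLAUDE.md or into a tool's project instructions. All token names and values are hypothetical placeholders, and the section layout is just one possible convention, not a format any tool requires.

```typescript
// Hypothetical brand tokens; replace with values from your own design system.
interface DesignTokens {
  colors: Record<string, string>;
  fontFamily: string;
  radiusPx: number;
}

const tokens: DesignTokens = {
  colors: { primary: "#1F6FEB", surface: "#FFFFFF", text: "#111827" },
  fontFamily: "Inter, sans-serif",
  radiusPx: 24,
};

// Renders the tokens as a plain-text section for a manifest file.
function renderTokenManifest(t: DesignTokens): string {
  const colorLines = Object.entries(t.colors).map(
    ([name, hex]) => `- color.${name}: ${hex}`,
  );
  return [
    "## Design tokens",
    ...colorLines,
    `- font.family: ${t.fontFamily}`,
    `- radius.default: ${t.radiusPx}px`,
    "",
    "## Conventions",
    "- Use only the tokens above; do not invent new colors or radii.",
  ].join("\n");
}
```

Calling `renderTokenManifest(tokens)` returns the text to paste. Keeping the tokens in a single object means the manifest and any prototype code can draw on the same values.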

2. Create a detailed plan

With vibe coding, "measure twice, cut once" applies. A detailed plan points the AI development model in the right direction.

My preferred workflow: Have a detailed conversation with GPT-5 or Claude about what you're designing. With all the context loaded, ask the AI assistant for a detailed prompt for Lovable. After reviewing it, copy and paste this plan into Lovable directly.

For slightly more complex projects with Cursor or Claude Code, I start with a more robust Product Requirement Document (PRD) created together with AI. This lays out a detailed plan for the model to build piece by piece.

3. Iterate piece by piece

AI development tools get confused with tasks that are too large. Break them into manageable sizes.

Start with one feature or section of a page. Once you have a first version, iterate with the smallest possible requests ("Change all button corner radius from 4 to 24"). The more self-evident the change, the likelier the model is to comply.

Version control is your friend. Models can go rogue and one seemingly minor tweak can break things elsewhere. Most tools like Cursor or Lovable let you go back in time. For more complex projects, diff and revert via Git become essential.

An emerging quality assurance pattern is to prompt the AI to generate unit tests for its own code, helping validate that features work as intended before moving to the next iteration.
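To make that concrete, here is a minimal TypeScript sketch of what such generated tests can look like, without any test framework. The `checkAnswer` function is a hypothetical piece of logic for a counting game, invented for this example; the point is the shape of the tests, not the game code.

```typescript
// Hypothetical game logic: compare the player's answer with the number shown.
type AnswerResult = "correct" | "too-few" | "too-many";

function checkAnswer(dinosShown: number, answer: number): AnswerResult {
  if (answer === dinosShown) return "correct";
  return answer < dinosShown ? "too-few" : "too-many";
}

// A tiny framework-free test runner: returns the names of failing cases,
// so an empty array means every case passed.
function runTests(): string[] {
  const cases: Array<[string, AnswerResult, AnswerResult]> = [
    ["exact match", checkAnswer(3, 3), "correct"],
    ["answer below count", checkAnswer(5, 2), "too-few"],
    ["answer above count", checkAnswer(1, 4), "too-many"],
  ];
  return cases
    .filter(([, actual, expected]) => actual !== expected)
    .map(([name]) => name);
}
```

Re-running `runTests()` after every small change gives a quick signal that a "minor tweak" has not broken something elsewhere. It is still worth reading the test cases yourself, since a model can write tests that simply mirror its own mistakes.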

4. Add your own thinking and polish

At their best, vibe coding tools serve as our exploration and prototyping assistants. It's still on us as designers and product leaders to hold the reins and own the product vision.

I recommend working in loops with the models - taking your own thinking or designs as a starting point, exploring directions or interactions with AI, then taking the work back to your hands for final direction and polish.

Given too much leeway and not enough opinionated direction, vibe coding tools produce mediocre results. Because AI models are trained to produce statistically probable outputs, their solutions often reflect a "lowest common denominator" of existing designs. This can lead to homogenized experiences - the dreaded "same pastel dashboard" vibe - devoid of unique brand character. Your role as curator and editor is essential to inject creativity and ensure the final result has soul.

Critical caveats to keep in mind

While vibe coding offers powerful capabilities, it comes with serious limitations that design leaders must understand.

The very fidelity of AI-generated prototypes can mislead non-technical stakeholders into underestimating the time and rigor required to build secure, scalable, maintainable products. You must clearly communicate that these artifacts are design validation tools, not nascent codebases.

AI-generated code is often brittle, poorly structured, and lacks documentation—optimizing for "working now" not "working forever." As Ruby on Rails creator David Heinemeier Hansson (DHH) put it, models can confidently "slush from one bug to another endlessly," fixing one issue while breaking three others. What works for 10 users can collapse at scale. AI excels at getting you to 80% done in 20% of the time, but that final 20% still takes 80% of the effort.

Beyond code quality, AI models trained on public code can reproduce common security vulnerabilities, and systems trained on biased data will amplify those biases—potentially excluding demographic groups or failing accessibility standards.

For designers: use vibe coding to validate concepts and explore interactions, but defer to professional developers for security review, ethical considerations, and anything facing real users at scale.

Vibe coding as a tool, not the driver

In 2025, vibe coding can be a useful addition to designers' and product leaders' toolkits. It can help us explore ideas, prototype with higher fidelity, and collaborate with developers on a deeper level.

It shouldn't be the driver. We should keep a tight grip on the product experience vision and the final polish, and not relinquish our own creativity.

A dose of realism is needed. Vibe coding tools are not magic, and LLMs hallucinate confidently.

Recently, GPT-5 on Cursor destroyed my iOS project completely.

For complex software deployment in real live products, professional developers are essential to ensure smart architectural choices, sound release control and robust security.

With those caveats in mind, I'd encourage all non-developers working with digital products to start "feeling the vibes" and experimenting with AI coding tools. This shift from hands-on creator to strategic conductor of systems represents a fundamental evolution in our role - where value is increasingly defined by our ability to frame problems, conduct deep user research, and provide the critical, human-centered vision that guides AI execution.

Your 30-day learning path

Ready to get started? Here's a practical roadmap to dive into vibe coding:

Week 1 - Get your hands dirty with simple projects

Start with three throwaway projects in Lovable or V0: a personal landing page, a simple calculator, and a photo gallery. The goal isn't perfection—it's understanding how prompting works and what these tools can actually do. Expect to feel clumsy. That's normal.

Week 2 - Build your component library

Create a basic design system or component library with 10-15 components: buttons, form fields, cards, navigation patterns. Use screenshots of your company's existing design or a design you admire. This becomes your reusable foundation. Save this project—you'll use it constantly.

Week 3 - Integrate with your real design system

Now bridge to your actual work. Take a recent Figma design you've created and try to recreate key sections using vibe coding tools. Reference your component library from Week 2. Focus on matching visual fidelity and understanding where the tools struggle. In Lovable, you can add instructions at the project level that specify your brand colors, typography, and component styles for consistency.

Week 4 - Create a stakeholder-ready prototype

Pick a real design challenge you're currently facing—maybe an interaction you've struggled to explain, or a concept that needs validation. Build a functional prototype you can actually test with users or present to stakeholders. Include a clear disclaimer about it being a validation tool, not production code.

If you're feeling confident and need more control or complexity (like integrating real APIs or advanced interactions), this is the week to experiment with Cursor or Claude Code. Start with a PRD and tackle it piece by piece.

Good vibes!