Introduction: Human-Centered, AI-Amplified Research

The AI-Enabled Research Paradox

There's never enough research in design.

You should be talking to 15 users, not 3. Reading all 2,347 support tickets, not the summary. Analyzing 12 competitors, not 3. But there aren't enough hours, and research budgets are always the first to be cut. You make do with less and hope you're not missing the critical insight.

AI tools for design research promise to solve this: faster planning, instant data collection, automated synthesis, reports in minutes.

But push too far and you're in an artificial echo chamber—AI generating synthetic findings from hallucinated data. Real insights come from humans: how we think, feel, and behave. Not AI's confident guesses.

Human-Centered, AI-Amplified Research

Through training more than 1,000 designers to use AI, I've developed a framework: human-centered, AI-amplified research.

Human-centered means:

- You set strategic direction and decide what questions to ask

- You interpret what insights mean for your users

- You validate findings against reality, not AI's confidence

AI-amplified means:

- AI researches dozens of sources and handles transcription, coding, and initial synthesis

- AI processes 50 interviews while you process 5

- AI finds patterns you'd miss in massive datasets

You own the strategy and judgment. AI owns the execution and scale.

Here's what this looks like: You spend 20 minutes with AI planning a study that used to take 2 hours. But you review every question for business context. AI transcribes and themes 30 interviews overnight. But you identify which patterns matter, because AI lacks taste and strategic judgment. AI generates five persona variations. But you validate them against real data, because AI hallucinates convincingly.

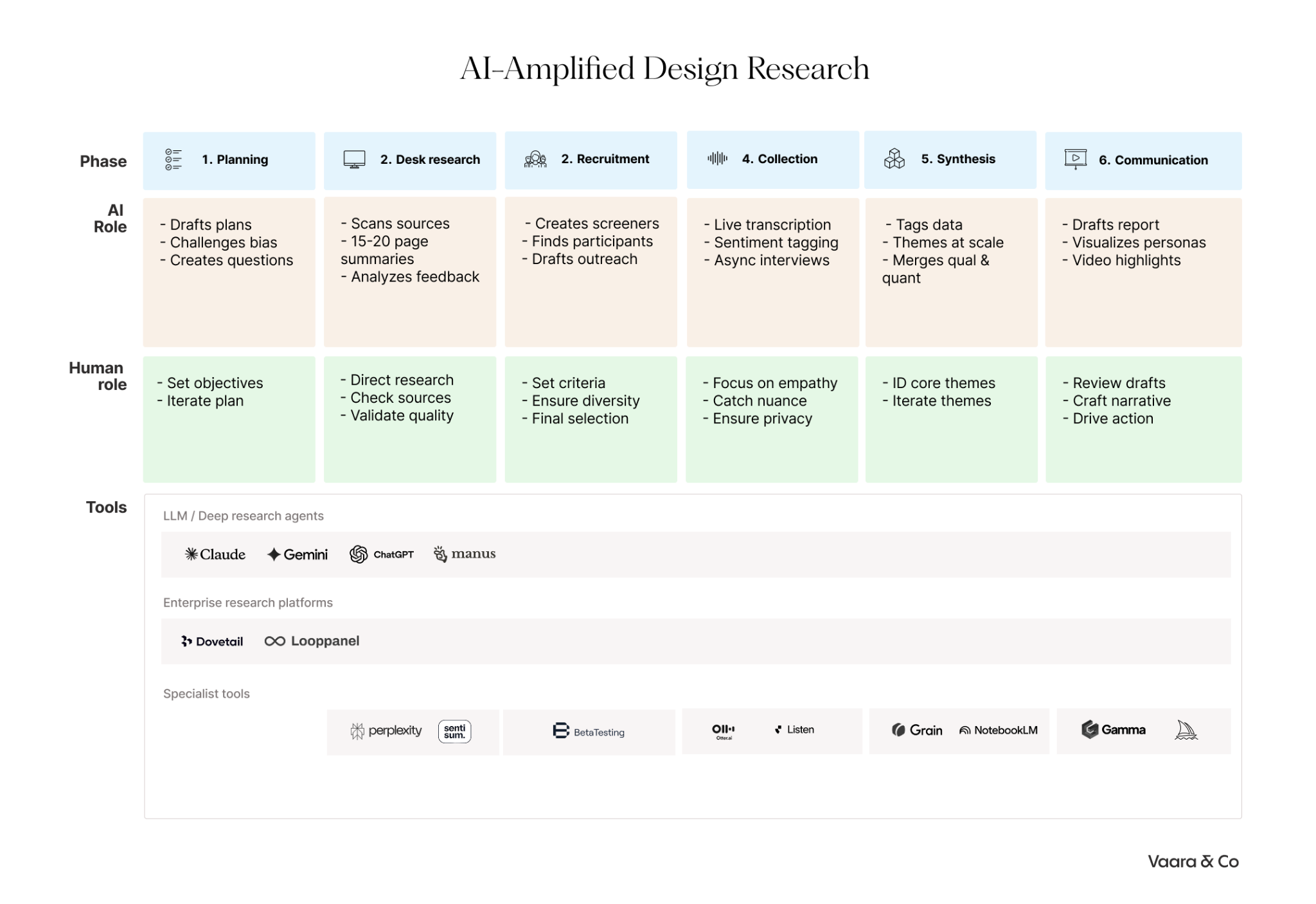

This balance plays out across six stages of the AI-powered research process:

- Plan your research

- Power desk research

- Recruit participants

- Collect data from real humans

- Synthesize mountains of insights

- Communicate findings

1. How to Plan Design Research with AI

Great research starts with a robust plan. You own the big picture: business objectives, insights you're seeking, and how they'll drive results.

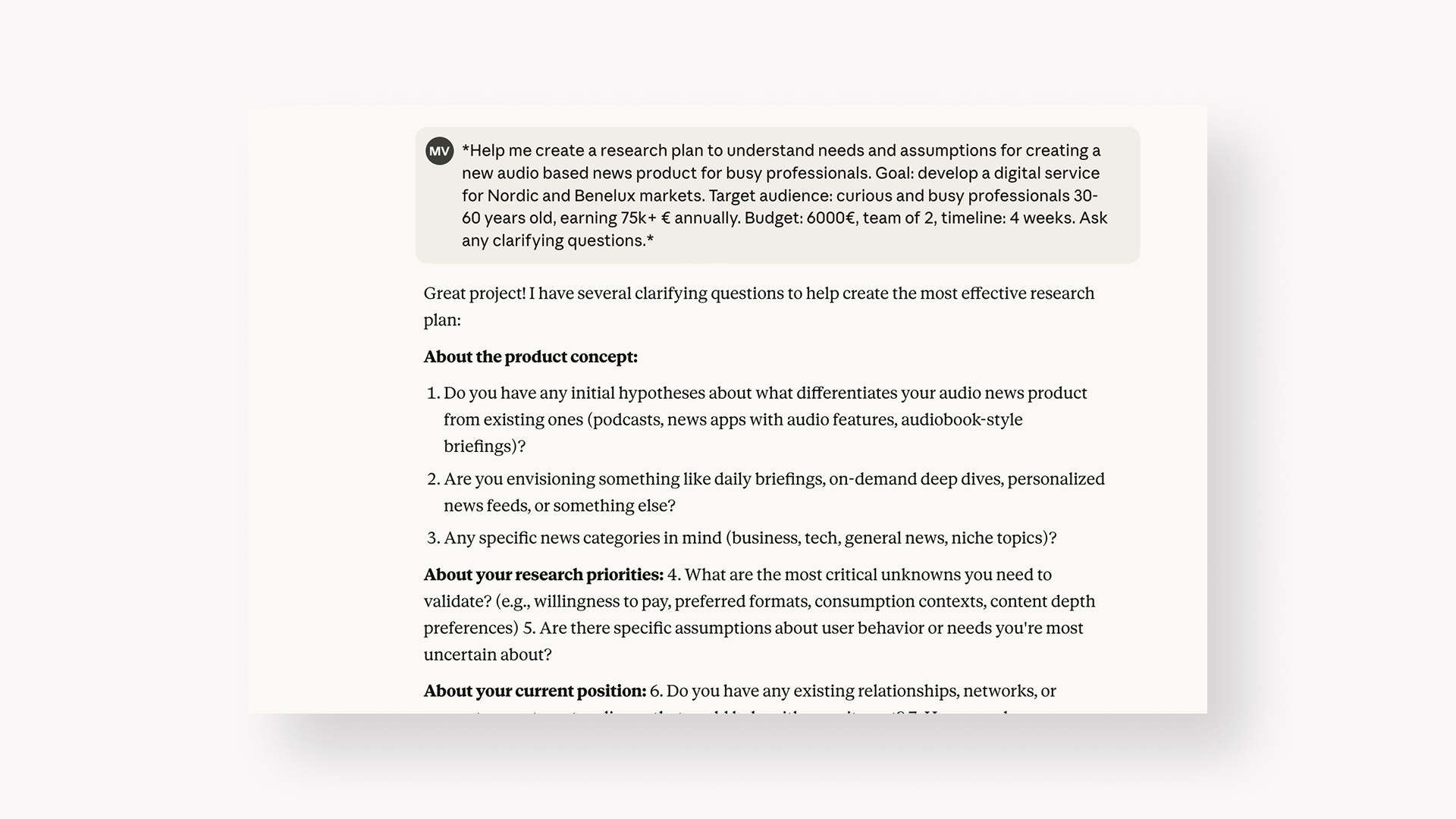

An LLM assistant like Claude or ChatGPT becomes your planning partner. Set the scene with:

- Your business and design challenge

- Target user or customer segments

- Research constraints: budget, timeline, team

Always prompt the LLM to ask clarifying questions.

Example AI research planning prompt: "Help me create a research plan to understand needs and assumptions for creating a new audio-based news product for busy professionals. Goal: develop a digital service for Nordic and Benelux markets. Target audience: curious and busy professionals aged 30-60, earning €75k+ annually. Budget: €6,000, team of 2, timeline: 4 weeks. Ask any clarifying questions."

Treat AI like an experienced colleague, not a search engine. Nielsen Norman Group suggests thinking of AI as an intern that works best with ample instructions, context, and corrections. Give it background, ask it to challenge your assumptions, and iterate rather than accepting the first output.

Using AI for research planning improves both speed and quality by challenging your thinking. As we gain more experience, many of us fall into the trap of favoring one or two research methods out of familiarity. AI models can challenge our mental biases and push us toward methods we might not have considered.

AI helps frame better design research questions. An IDEO study found that executives who ideated based on AI-created questions came up with 56% more ideas and 13% more diverse ideas, with 27% more detail.

Questions expand the problem space and encourage divergent thinking; examples narrow it and anchor thinking. AI is most powerful when it helps you ask better questions, not when it prescribes answers.

In my AI training workshops for designers, teams consistently report that AI helps them "get rid of the blank canvas problem" during planning. Rather than staring at an empty research plan, they use LLMs to generate skeleton outlines, then refine them based on their domain expertise. One participant noted: "It's like having a thought partner who helps you think through decision making without doing the thinking for you."

Once you've created a plan, review and iterate. Ask for a weekly task list with reminder notifications.

If your team runs research projects often, build a reusable AI research assistant template using Custom GPTs, Claude Projects, or Dovetail. Reusing the same template, business context, and framework each time saves you from reinventing the wheel.
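At its simplest, a reusable template is a fill-in-the-blanks prompt you keep and share with the team. The sketch below uses Python's standard `string.Template`; the field names (`challenge`, `segments`, `framework`, and so on) are hypothetical, and this is plain text assembly, not an API of Custom GPTs, Claude Projects, or Dovetail.

```python
from string import Template

# Hypothetical reusable planning prompt; the field names are illustrative only.
RESEARCH_PLAN_PROMPT = Template(
    "Help me create a research plan for the following project.\n"
    "Business and design challenge: $challenge\n"
    "Target segments: $segments\n"
    "Budget: $budget, team: $team, timeline: $timeline\n"
    "Analysis framework: $framework\n"
    "Challenge my assumptions and ask any clarifying questions before drafting."
)


def build_plan_prompt(**fields: str) -> str:
    """Fill in the template; substitute() raises KeyError if a field is missing."""
    return RESEARCH_PLAN_PROMPT.substitute(**fields)


if __name__ == "__main__":
    print(build_plan_prompt(
        challenge="a new audio-based news product for busy professionals",
        segments="curious, busy professionals aged 30-60 in Nordic and Benelux markets",
        budget="€6,000",
        team="2 people",
        timeline="4 weeks",
        framework="Jobs-to-be-done",
    ))
```

Failing loudly on a missing field is a deliberate choice here: it stops someone from sending a half-filled prompt that quietly drops the business context.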

At Google, UX researcher Alita Kendrick has built specialized GPT instances tailored to her team's needs, moving from basic AI use to "building AI agents that scale research quality and impact." This is the maturity curve: from generic AI tools to custom research copilots that know your domain.

2. How to Power Desk Research with AI Tools

Desk research typically starts in your first week: browsing the web, consuming content, reviewing analytics, and observing.

AI powers desk research by:

- Diving into topics using Manus or Deep Research from Claude, Gemini or ChatGPT

- Searching for specific findings across hundreds of sources

- Making findings easily consumable with audio and video

- Analyzing mountains of feedback from support tickets, reviews, and discussions

Deep Research agents typically spend 10-20 minutes going over dozens of sources and produce a 15-20 page summary. Gemini 2.5 Pro does the most detailed research, but GPT-5 is also reliable.

Always check sources and guide AI to use high quality relevant sources.

Think of AI-powered desk research as a tireless intern who can browse hundreds of sources simultaneously. But like any intern, it needs direction and supervision.

Once you've generated several Deep Research reports, use NotebookLM to create an audio or video summary. For quick search queries, Perplexity is most reliable.

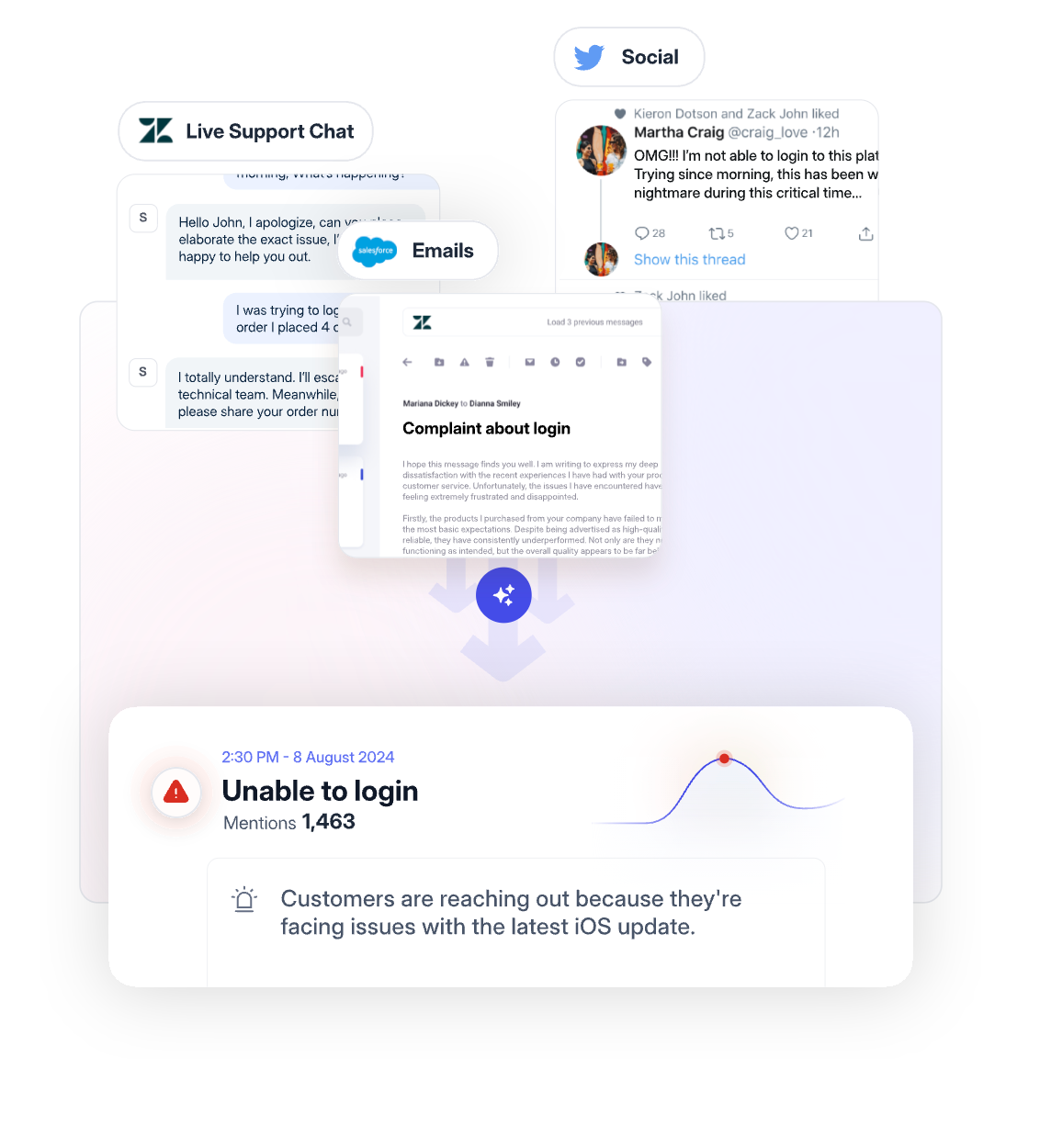

You can also use AI to analyze existing company data. Use an LLM or specialist tool like Sentisum to mine insights from support tickets, reviews or discussions. AI shines here—it's difficult for humans to reliably comb through thousands of messages.

One of my clients in the financial industry built an internal tool based on GPT-4 to analyze thousands of customer issues from support tickets, uncovering insights previously hidden in the minds of individual customer service representatives.

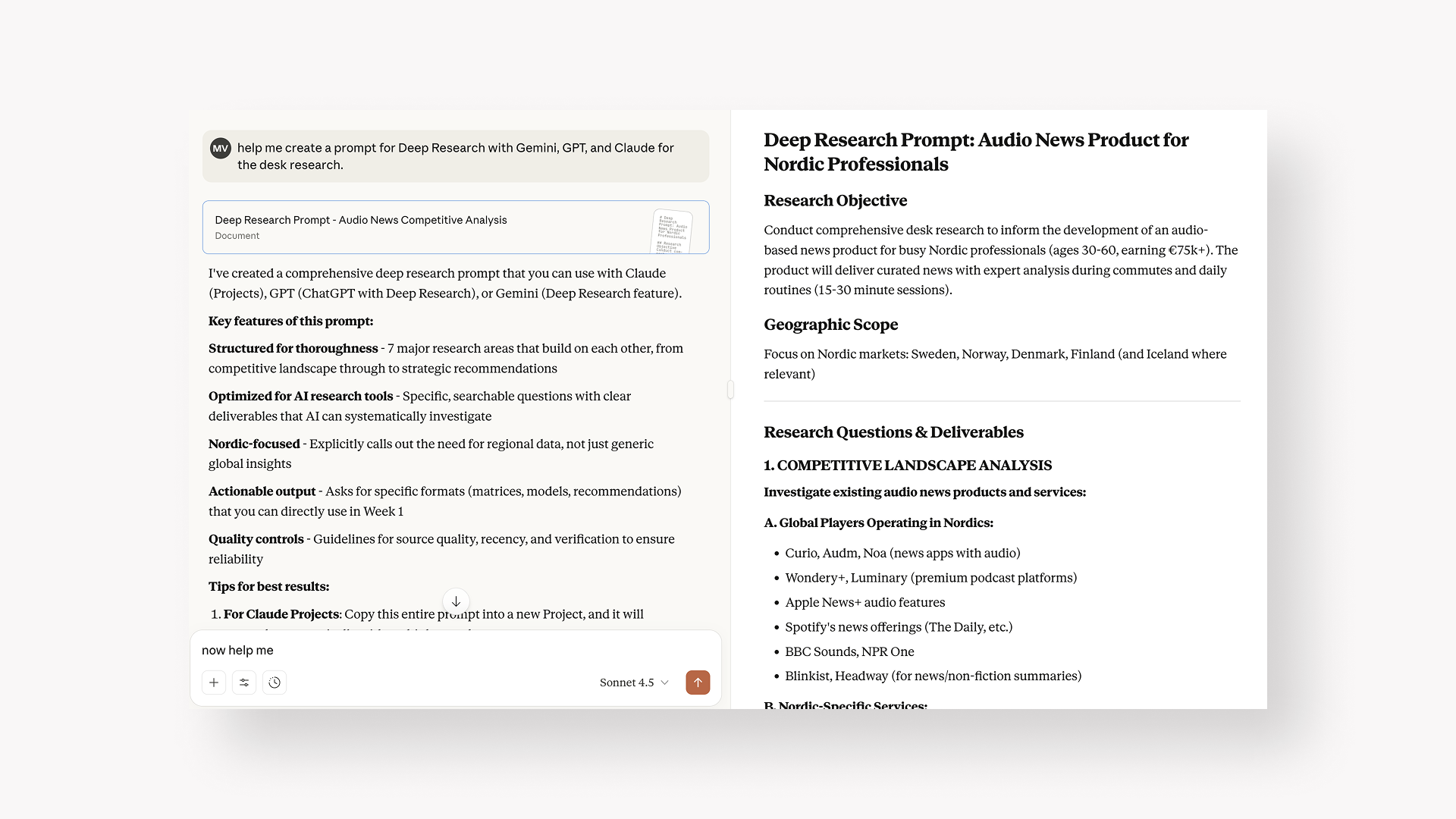

Project example: AI desk research workflow

In our digital audio news product example, continue in the same LLM chat:

FIRST STEP: "Help me create a prompt for Deep Research with Gemini, GPT, and Claude for the desk research."

SECOND STEP: Use this prompt to conduct Deep Research on Gemini, ChatGPT, Claude and Manus.

THIRD STEP: Ask Gemini to combine the reports into one, and use NotebookLM to turn it into a podcast episode. Add the combined report as context in Dovetail if you're using it.

3. How to Recruit Research Participants with AI

Recruiting research participants has traditionally included tons of administrative work. AI streamlines it.

Use your LLM assistant to:

- Create a screener

- Form outreach strategy and messaging

- Select participants from a pool

Advanced AI recruitment platforms like BetaTesting automatically match participants to studies and flag inconsistent survey responses in real time. They catch "professional testers" by analyzing past performance. This cuts what used to take 5-10 days down to hours.

AI can draft screening questions, suggest follow-up probes, and generate personalized outreach emails. Once you have a pool, AI can rank them by fit and help identify the most diverse, representative sample.

Critical: Maintain human oversight on final selection. AI handles filtering and logistics, but you should review the shortlist to ensure you're not excluding important perspectives or creating bias.
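The filter-then-shortlist split can be sketched in a few lines. This is a hypothetical illustration, not how BetaTesting or any other platform works: each respondent has made-up screener fields, a simple consistency check drops contradictory answers (saying you never listen to podcasts while reporting weekly podcast hours), and the rest are ranked by a toy fit score against the audio news target profile. The output is a shortlist for a human to review, not a final selection.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    # Hypothetical screener fields, for illustration only.
    name: str
    age: int
    income_eur: int
    listens_to_podcasts: bool
    podcast_hours_per_week: float
    reads_news_daily: bool


def is_consistent(r: Respondent) -> bool:
    """Reject contradictory answers, a common sign of careless or professional testers."""
    return not (not r.listens_to_podcasts and r.podcast_hours_per_week > 0)


def fit_score(r: Respondent) -> int:
    """Toy fit score against the target profile (aged 30-60, earning 75k+ EUR)."""
    score = 0
    score += 2 if 30 <= r.age <= 60 else 0
    score += 2 if r.income_eur >= 75_000 else 0
    score += 1 if r.reads_news_daily else 0
    score += 1 if r.listens_to_podcasts else 0
    return score


def shortlist(pool: list[Respondent], size: int) -> list[Respondent]:
    """Drop inconsistent respondents, then rank by fit. A human reviews the result."""
    consistent = [r for r in pool if is_consistent(r)]
    return sorted(consistent, key=fit_score, reverse=True)[:size]


if __name__ == "__main__":
    pool = [
        Respondent("A", 45, 90_000, True, 3.0, True),
        Respondent("B", 25, 50_000, False, 2.0, True),  # contradictory podcast answers
        Respondent("C", 35, 80_000, False, 0.0, False),
    ]
    for r in shortlist(pool, size=2):
        print(r.name, fit_score(r))
```

A fixed score like this is exactly where bias creeps in: anyone outside the hard-coded ranges sinks to the bottom, which is why the final call stays with you.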

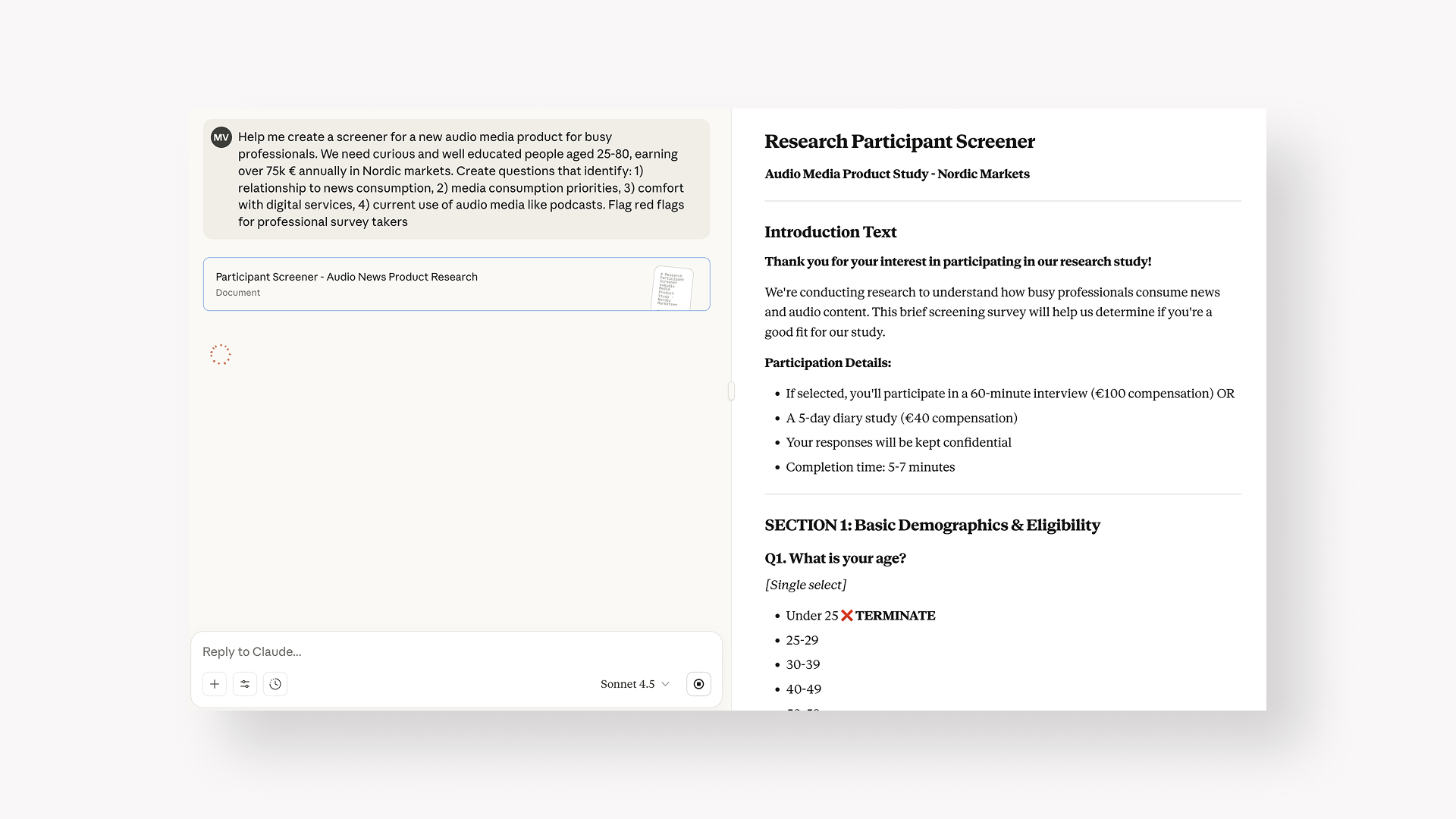

Project example

Continuing with our digital audio project, we can ask for a screener in the same Claude chat as before.

Example AI participant screening prompt: "Help me create a screener for a new audio media product for busy professionals. We need curious and well-educated people aged 30-60, earning over €75k annually in Nordic and Benelux markets. Create questions that identify: 1) relationship to news consumption, 2) media consumption priorities, 3) comfort with digital services, 4) current use of audio media like podcasts. Include checks that flag professional survey takers."

4. How to Collect Design Research Data with AI

After recruiting, it's time to collect data from participants. This means interacting with real humans through interviews, observation, diary studies, or surveys.

AI assists in design research data collection in several ways:

Note-taking – Tools like Otter, Microsoft Copilot, or ChatGPT transcribe interviews live, freeing you to focus on the discussion.

Sentiment analysis – Dovetail and Looppanel tag emotions (positive, negative, neutral) after processing interviews.

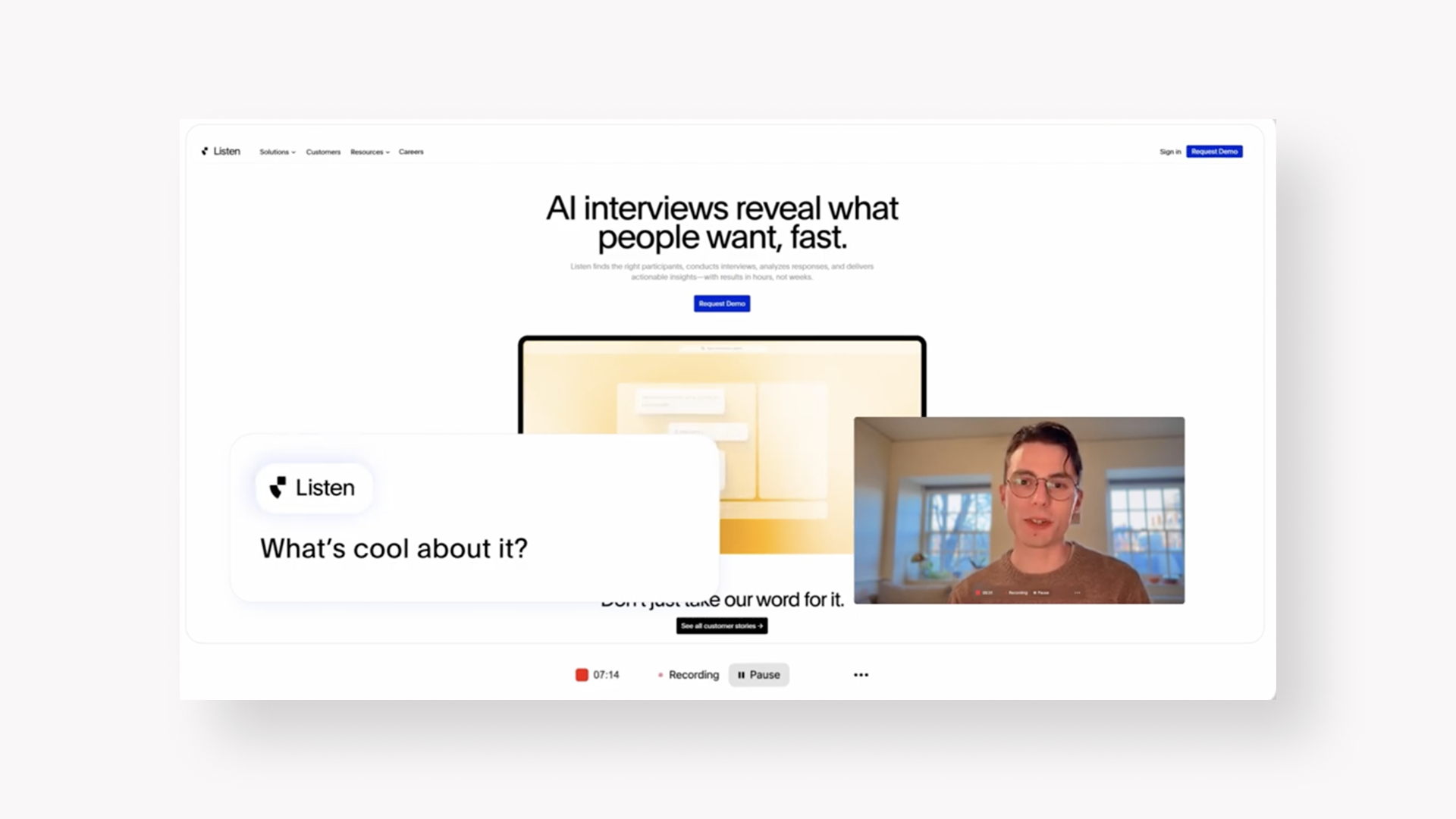

Moderating interviews – AI-moderated interview tools like Listenlabs can run hundreds of asynchronous video interviews.

Observational studies – AI observes thousands of users engaging with your product and produces synthesized findings.

The key is striking the right balance. AI can scale your research efforts and ensure no insights fall through the cracks. But if you take human researchers out of the loop completely, you risk missing the subtle insights that come only from observing tone, body language, and non-verbal cues.

A critical limitation: AI can analyze and mimic the language of sentiment, but it doesn't feel frustration, delight, or confusion. AI might read "Oh, fantastic! I get to update my profile information again" and miss the sarcasm, flagging it as positive. Human researchers catch these nuances.
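To see how surface cues mislead, here is a deliberately naive word-list sentiment scorer. It is not how Dovetail, Looppanel, or an LLM works internally; it only shows how anything that leans on words like "fantastic" can label the sarcastic quote as positive. The word lists are made up for illustration.

```python
import re

# Tiny, made-up lexicons for illustration only.
POSITIVE = {"fantastic", "great", "love", "easy", "delightful"}
NEGATIVE = {"frustrating", "confusing", "hate", "broken", "slow"}


def naive_sentiment(text: str) -> str:
    """Label text by counting lexicon hits; ignores tone, context, and sarcasm."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


quote = "Oh, fantastic! I get to update my profile information again"
print(naive_sentiment(quote))  # prints: positive, although the speaker is annoyed
```

Modern models are far less literal than this, but the failure mode is the same in kind: they read the words, while you also hear the sigh.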

Another common challenge from my clients: designers worry about data privacy when using AI transcription tools. As one workshop participant put it, "The research content is classified—we can't just feed it into ChatGPT." For sensitive projects, use enterprise tools with proper data handling (like Dovetail or company-approved AI integrations) rather than public LLMs.

Always ensure transparency, data security and privacy in how you use AI.

5. How to Synthesize Design Research Insights with AI

Once you've collected insights from your participants, it's time to make sense of what you've gathered.

AI helps synthesize design research by:

Analyzing within a framework – Claude or Gemini create a first draft of findings. LLMs analyze transcriptions using frameworks like How Might We or Jobs-to-be-done.

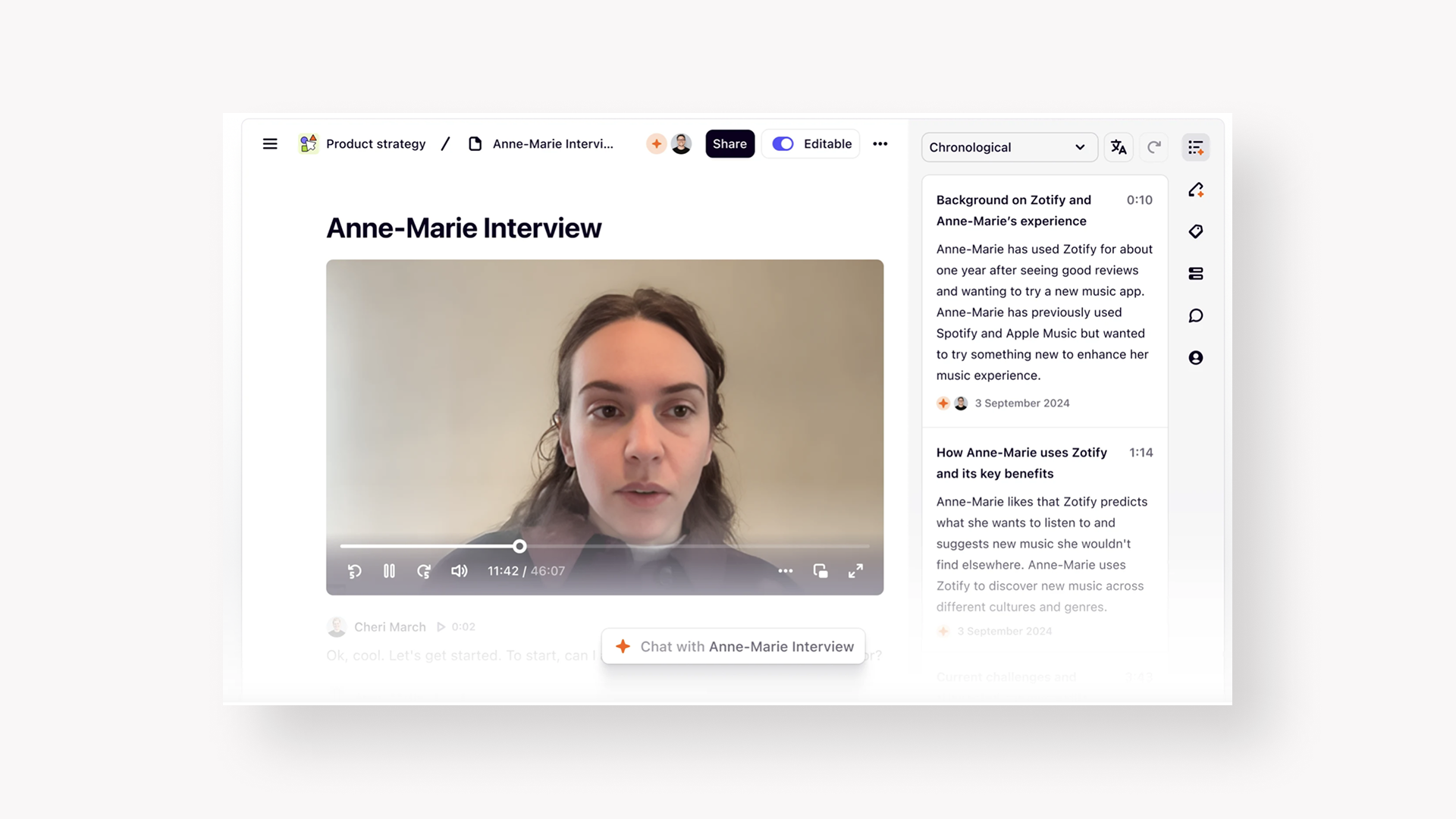

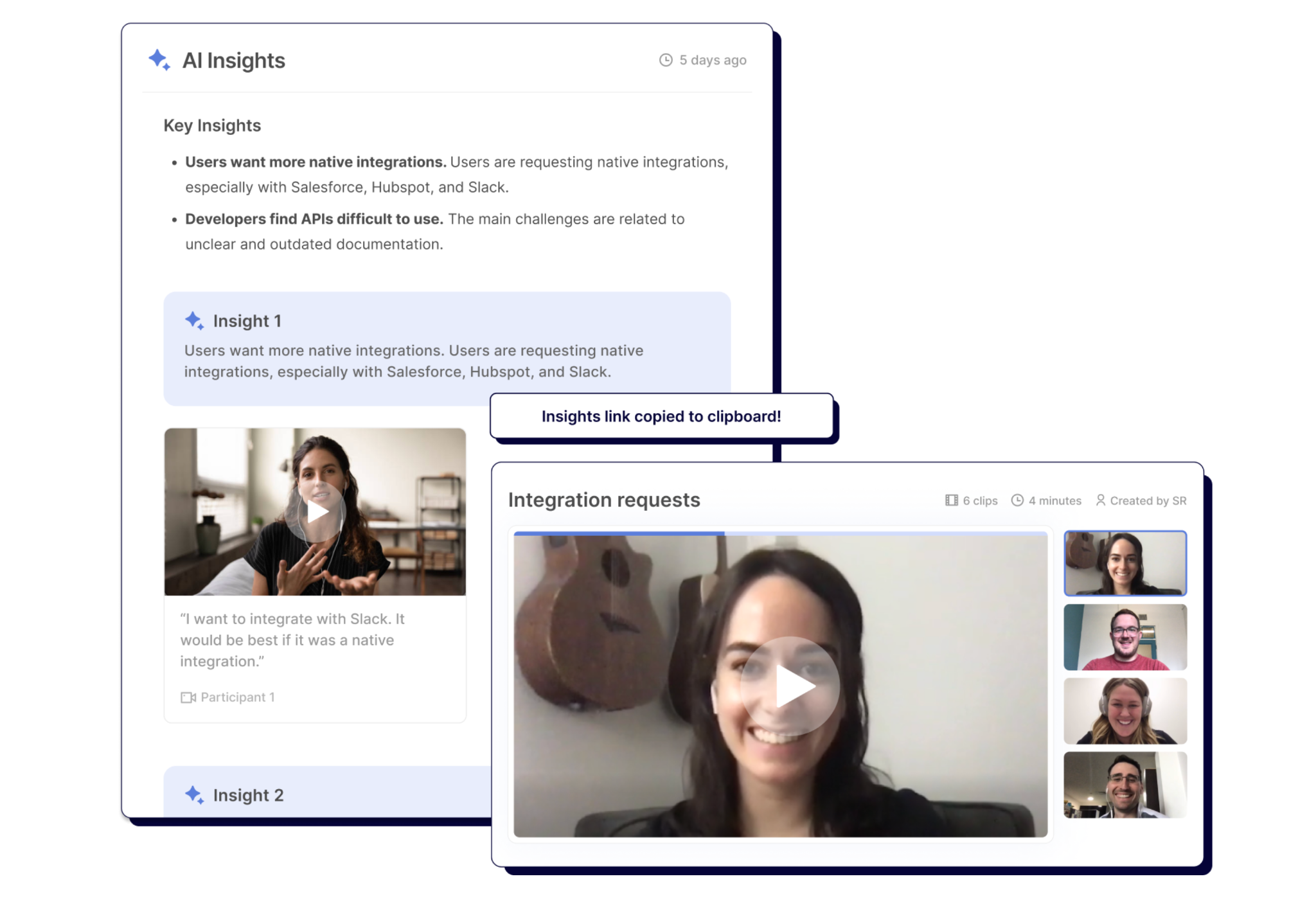

Enterprise tools like Dovetail or Looppanel bring AI-driven tagging, theming and analysis across different sources (tickets, interviews, sales calls) to one hub. Many design teams I've worked with find Dovetail particularly useful.

Combining qualitative and quantitative – Claude can help you dig deeper into why customers aren't converting by analyzing site analytics (the what) alongside interview transcripts and exit surveys (the why).

Working with datasets – NotebookLM lets you upload interview transcripts and ask detailed questions. It uses only that specific dataset as its source and creates audio and video summaries.

A practical tip from Google's Alita Kendrick: do a "halfway check" mid-study. Feed partial transcripts and research questions into AI to see if you're missing key signals. This acts like a second pair of eyes, helping you spot patterns you might overlook and adjust while the study is running—enabling more iterative, adaptive research.

Research from Nielsen Norman Group indicates AI in design processes can increase team efficiency by up to 40%, with some professionals experiencing a 66% productivity boost. The speed gains are real—but only when human researchers remain in the loop to interpret meaning and ensure quality.

In my training workshops, designers describe synthesis as where AI delivers the biggest time savings. One participant shared: "Making synthesis with GPT is very much helping with having less pressure, especially when having less time." Teams use tools like NotebookLM to simplify concepts and summarize information, then apply their expertise to identify which patterns actually matter for their users.

Combine the breadth of AI analysis—combing through thousands of data points—with the depth, intuition and contextual awareness of experienced human researchers.

Project example

Example AI synthesis prompt: In the same AI chat with our digital audio product research project, we can prompt the AI: "Here are 5 interview transcripts of my conversations about a new audio media product for busy professionals. Craft 5 'How Might We' questions to identify how we could create a new useful and delightful audio media experience for them."

Action: Preserve data privacy and security. Never include identifiable information, and make sure your AI provider isn't using your data for training.
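One practical habit is to scrub transcripts before they go anywhere near an LLM. The sketch below assumes plain-text transcripts and a list of participant names you already know; the regular expressions for emails and phone-like numbers are simplified assumptions and will miss things (street addresses, employers, indirect identifiers), so treat it as a first pass that still needs a human read, not a guarantee of anonymity.

```python
import re

# Simplified patterns; both are assumptions and will not catch every format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# An optional "+", then at least 7 characters of digits, spaces, or dashes,
# starting and ending with a digit.
PHONE = re.compile(r"\+?\d[\d\s-]{5,}\d")


def redact(transcript: str, names: list[str]) -> str:
    """Replace emails, phone-like numbers, and known names with placeholders."""
    text = EMAIL.sub("[EMAIL]", transcript)  # emails first, so their digits aren't read as phones
    text = PHONE.sub("[PHONE]", text)
    for i, name in enumerate(names, start=1):
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, f"[PARTICIPANT {i}]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    raw = "Hi, I'm Anna. Email me at anna.v@example.com or call +358 40 123 4567."
    print(redact(raw, ["Anna"]))
```

Numbered placeholders keep participants distinguishable in the synthesis without exposing who they are.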

6. How to Communicate Research Insights with AI

Insights don't matter if they're not communicated effectively. AI adds speed, quality, and richness.

Starting with real insights as context, AI tools help you:

Draft presentations that communicate insights clearly using Claude, Gemini or Gamma.

Use storytelling to bring insights to life with personas and journeys using Claude or ChatGPT.

Visualize personas with rich detail using Midjourney.

Looppanel and Grain automatically identify and compile key moments from user sessions—strong emotions, critical struggles, insightful quotes—into video highlight reels. These short clips build stakeholder empathy far more effectively than static quotes in slides.

Always use your real insights as input. LLMs use their world knowledge to add texture and richness.

Platforms like Listenlabs create synthetic personas from your interview data that you can chat with. Use these for quick exploration only—they can't replace the nuance and surprises from real humans.

Critical: Review and iterate on the first AI-produced draft to ensure accuracy and quality. Human researchers must be in the loop at every research step.

Project example

Let’s create personas and journeys for our audio media product research. Continue with Claude to describe three personas based on your research and quantitative data.

"Using the 10 customer interview transcripts I shared and this survey data, help me create 3 different user personas. I want the personas to represent different attitudes and motivations towards using audio media content."

"For each persona, create one customer journey story of how and why they would use an audio news product."

Human-Centered Insights in an Era of AI Agents

We're at an interesting point in human-centered design and research.

AI promises insights at unprecedented speed and low cost. At the same time, it carries the peril of obvious insights, hallucination echo chambers, and fast food research: something that ticks the box but doesn't nourish us with useful insights.

We need to find the right role for humans.

We lead the research process. We curate relevant insights. We ensure research is grounded in relevant context. We spend time with actual users to uncover deeper insights. We have the taste and wisdom to ensure output is truthful and unlocks a deeper understanding of who we serve.

AI as our research partner helps us progress faster by formulating robust plans, ensuring nothing falls through the cracks with live transcription, and synthesizing findings from thousands of data points in different formats. It brings our insights alive with richer stories and illustrations.

Jakob Nielsen's prediction is stark: a tenfold increase in individual productivity over the next decade will make research "thirty, forty, fifty times cheaper." When the cost of generating an insight plummets, the researcher's value shifts from labor-intensive execution to strategic activities AI can't perform: formulating critical questions, interpreting patterns within business context, and weaving findings into compelling narratives that drive action.

The new frontier, AI agents, will sharpen this division of roles. Autonomous AI agents will increasingly create research plans, recruit participants, run moderated interviews, and synthesize findings. Still, it's our role to guide the process with relentless focus on quality, taste, and real human insights.

References and further reading

https://www.nngroup.com/articles/ai-intern/

https://www.ideo.com/2024-ai-research

https://www.usertesting.com/resources/podcast/generative-ai-ux-research